by Paul Curzon, Queen Mary University of London

People often suggest neurodiverse people make good computer scientists. For example, one of the most famous autistic people, Temple Grandin, an academic at Colorado State University and animal welfare expert, has suggested programming is one of the jobs autistic people are potentially naturally good at (along with other computer science linked jobs) and that “Half the people in Silicon Valley probably have autism.” So what makes a good computer scientist? And why might people suggest neurodiverse people are good at it?

What makes a good programmer? Is it knowledge, skills or is it the type of person you are? It is actually all three though it’s important to realise that all three can be improved. No one is born a computer scientist. You may not have the knowledge, the skills or be the right kind of person now, but you can improve them all.

To be a good programmer, you need to develop specialist knowledge such as knowing what the available language constructs are, knowing what those constructs do, knowing when to use them over others, and so on. You also need to develop particular technical skills like an ability to decompose problems into sub-problems, to formulate solutions in a particular restricted notation (the programming language), to generalise solutions, and so on. However, you also need to become the right kind of person to do well as a programmer.

To help my students work on becoming the kind of person who makes a good programmer, I made a list of the attributes I associate with good student programmers. My list includes: attention to detail, an ability to think clearly and logically, being creative, having good spatial visualising skills, being a hard worker, being resilient when things go wrong (and so determined), being organised, being able to meet deadlines, enjoying problem solving, being good at pattern matching, thinking analytically and being open to learning new and different ways of doing things.

More recently, when taking part in a workshop about neurodiversity I was struck by a similar list we were given. Part of the idea behind ‘neurodiversity’ is that everyone is different and everyone has strengths and weaknesses. If you think of ‘disability’ you tend to think of apparent weaknesses. Those ‘weaknesses’ are often there because the world we have created has turned them into weaknesses. For example, being in a wheelchair makes it hard to travel because we have built a world full of steps, kerbs and cobbles, doors that are hard to manipulate, high counters and so on. If we were to remove all those obstacles, a wheelchair would not have to reduce your ability to get around. Thinking about neurodiversity, the suggestion is to think about the strengths that come with it too, not just the difficulties you might encounter because of the way we’ve made the world.

The list of strengths of neurodiverse people given at the workshop was: attention to detail, focussed interest, problem-solving, creativity, visualising, pattern recognition. Looking further you find both those positives reinforced and new positives. For example, one support website gives the positives of being an autistic person as: attention to detail, deep focus, observation skills, ability to absorb and retain facts, visual skills, expertise, a methodological approach, taking novel approaches, creativity, tenacity and resilience, accepting of difference and integrity. Thinking logically is also often picked out as a trait that neurodiverse people are good at. The similarity of these lists to my list of what kind of person my students should aim to turn themselves into is very clear. Autistic people can start with a very solid basis to build on. If my list is right, then their personal positives may help neurodiverse people to quickly become good programmers.

Here are a few of those positives others have picked out that neurodiverse people may have and how they relate to programming:

Attention to detail: This is important in programming because a program is all about detail, both in the syntax (not missing brackets or semicolons) and, more importantly, in not missing situations that could occur, which are cases the program must cover. A program must deal with every possibility that might arise, not just some. The way it deals with them also matters in the detail. Poor programs might just announce a mistake was made and shut down. A good program will, for example, explain the mistake and give the user a way to correct it. Detail like that matters. Attention to detail is also important in debugging as bugs are just details gone wrong.
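As a minimal sketch of what covering every case can look like, here is a small Python function that checks an age typed in by a user. The function name `parse_age` and the upper limit of 150 are assumptions made up for this illustration. Instead of just failing, each case returns a message that explains the mistake and says how to correct it.

```python
def parse_age(text):
    """Return (age, None) on success, or (None, message) explaining the problem."""
    cleaned = text.strip()

    # Case 1: nothing was typed at all.
    if cleaned == "":
        return None, "Nothing was entered. Please type your age as a whole number, like 16."

    # Case 2: the text is not a whole number (letters, decimals, symbols).
    try:
        age = int(cleaned)
    except ValueError:
        return None, f"'{cleaned}' is not a whole number. Please use digits only, like 16."

    # Case 3: a number, but one that cannot be an age.
    if age < 0:
        return None, "An age cannot be negative. Please check and try again."

    # Case 4: a number that is implausibly large (150 is an assumed limit).
    if age > 150:
        return None, f"{age} seems too large to be an age. Please check and try again."

    return age, None
```

A program that only handled the successful case would be a quarter of the length, but it would fall over the first time someone pressed Enter by accident.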

Resilience and determination: Programming is like being on an emotional roller coaster. Getting a program right is full of highs and lows. You think it is working and then the last test you run shows a deep flaw. Back to the drawing board. As a novice learning it is even worse. The learning curve is steep as programming is a complex skill. That means there are lots of lows and seemingly insurmountable highs. At the start it can seem impossible to get a program to even compile never mind run. You have to keep going. You have to be determined. You have to be resilient to take all the knocks.

Focussed interest: Writing a program takes time and you have to focus. Stop and come back later and it will be so much harder to continue and to avoid making mistakes. Decomposition is a way to break the overall task into smaller subtasks, each becoming a method to code, and that helps, once you have the skill. However, even then being able to maintain your focus to finish each method, and so each subtask, makes the programming job much easier.
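To illustrate decomposition, here is a small Python sketch. The task (reporting a student's average mark) and the function names are invented for this example. Each subtask is a short function that can be finished and checked in one focussed sitting before moving on to the next.

```python
def total(marks):
    """Subtask 1: add up all the marks."""
    result = 0
    for mark in marks:
        result += mark
    return result


def average(marks):
    """Subtask 2: work out the mean mark, reusing total().

    Assumes the list of marks is not empty.
    """
    return total(marks) / len(marks)


def report(name, marks):
    """Overall task: combine the finished subtasks into one line of text."""
    return f"{name}: average {average(marks):.1f}"


print(report("Ada", [70, 80, 90]))  # prints "Ada: average 80.0"
```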

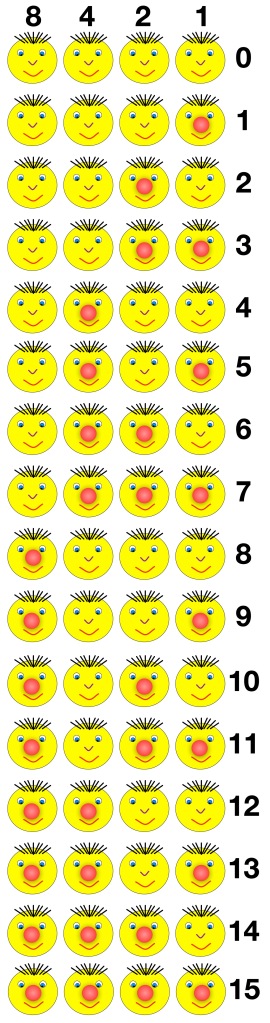

Pattern recognition: Human expertise in anything ultimately comes down to pattern matching new situations against old. It is the way our brains work. Expert chess players pattern match situations to tell them what to do, and so do firefighters in a burning building. So do expert programmers. Initially programming is about learning the meaning of programming constructs and how to use them, problem solving every step of the way. That is why the learning curve is so steep. As you gain experience though it becomes more about pattern matching: realising what a particular program needs at this point and how it is similar to something you have seen before, then matching it to one of many standard template solutions. Then you just whip out the template and adapt it to fit. Spot something is essentially a search task and you whip out a search algorithm to solve it. Need to process a two-dimensional array – you just need the rectangular for loop solution. Once you can do that kind of pattern matching, programming becomes much, much simpler.
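The two templates mentioned above might look something like this in Python. This is only a sketch: the function names are made up for illustration, and linear search is just one of several search algorithms you might reach for.

```python
def find_index(items, target):
    """Search template (linear search): position of target, or -1 if absent."""
    for index, item in enumerate(items):
        if item == target:
            return index
    return -1


def grid_total(grid):
    """Rectangular for loop template: visit every cell, row by row."""
    total = 0
    for row in grid:        # outer loop: one pass per row
        for cell in row:    # inner loop: one pass per cell in that row
            total += cell
    return total


print(find_index(["ant", "bee", "cat"], "bee"))  # prints 1
print(grid_total([[1, 2, 3], [4, 5, 6]]))        # prints 21
```

Once the shape of the nested loop is familiar, adapting it is quick: swap the line that adds up the cells for one that counts, prints or compares them and the same template solves a different problem.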

Creativity and doing things in novel ways: Writing a program is an act of creation, so, just like arts and crafts, it involves creativity. Just writing a program is one kind of creativity. Coming up with an idea for a program, or spotting a need no one else has noticed so you can write a program that fills that need, requires great creativity of a slightly different kind. So does coming up with a novel solution once you have a novel problem. Developing new algorithms is all about thinking up a novel way of solving a problem and that of course takes creativity. Designing interfaces that are aesthetically pleasing but make a task easier to do takes creativity. If you can think about a problem in a different way to everyone else, then likely you will come up with different solutions no one else thought of.

Problem solving and analytical minds: Programming is problem solving on steroids. Being able to think analytically is an important part of problem solving and is especially powerful if combined with creativity (see above). You need to be able to analyse a problem, come up with creative solutions and then analyse which of those creative solutions is the best way of solving it. Being analytical helps with solid testing too.

Visual thinking: Research suggests those with good visual, spatial thinking skills make good programmers. The reasons are not clear, but good programs are all about clear structure, so it may be that the ability to easily see the structure of programs and manipulate them in your head is part of it. That is part of the idea of block-based programming languages like Scratch and why they are used as a way into programming for young children. The structure of the program is made visual. Some paradigms of programming are also naturally more visual. In particular object-oriented programming sees programs as objects that send messages to each other and that is something that can naturally be visualised. As programs become bigger that ability to still visualise the separate parts and how they work as a whole is a big advantage.

A methodological approach: Novice programmers just tinker and hack programs together. Expert programmers design them. Many people never seem to get beyond the hacking stage, struggling with the idea of following a method to design first, yet it is vital if you are to engineer serious programs that work. That doesn’t mean that programming is just following methods. Tinkering can be part of the problem solving and coming up with creative ideas, but it should be used within rigorous methodology, not instead of it. Good programming teams also spend more time testing programs than writing them, and that takes rigorous methods to do well too. Software engineering is all about following rigorous methods precisely because it is the only way to develop real programs that work and are fit for purpose. Vast amounts of the software that is written is never used because it is useless. Rigorous methods give a way to avoid many of the problems.

Logical thinking: Being able to think clearly and logically is core to programming. It combines some of the things above like attention to detail, and thinking clearly and methodologically. Writing programs is essentially the practice of applied logic as logic underpins the semantics (i.e. meaning) of programming languages and so programs. You have to think logically when writing programs, when testing them and when debugging them. Computers are machines built from logic and you need to think in part like a computer to get it to do what you want. A key part of good programming is doing code walkthroughs – as a team stepping through what a program does line by line. That requires clear logical thinking to think in the steps the computer performs.

I could go on, but will leave it there. The positives that neurodiverse people might have are very strongly positives for becoming a good programmer. That is why some of the best students I’ve had the privilege to teach have been neurodiverse students.

Different people, neurodiverse or otherwise, will start with different positives, different weaknesses. People start in different places for different reasons, some ahead, some behind. I liked doing puzzles as a child, so spent my childhood devouring logic and algorithmic puzzles. That meant when I first tried to learn to program, I found it fairly easy. I had built important skills and knowledge and had become a good logical thinker and problem solver just for fun. I learnt to program for fun. That meant it was as if I’d started way beyond the starting line in the race to become a programmer. Many neurodiverse people do the same, if for different reasons.

Other skills I’ve needed as a computer scientist I have had to work hard on, developing strategies to overcome my weaknesses. I am a shy introvert. However, I need to both network and give presentations as a computer scientist (and ultimately now I give weekly lectures to hundreds at a time as an academic computer scientist). For that I had to practise, learn theory about good presentation and perhaps most importantly, given how paralysing my shyness was, devise a strategy to overcome that natural weakness. I did find a strategy – I developed an act. I have a fake extrovert persona that I act out. I act being that other person in these situations where I can’t otherwise cope, so it is not me you see giving presentations and lectures but my fake persona. Weaknesses can be overcome, even if they mean you start far behind the starting line. Of course, some weaknesses and ways we’ve built the world mean we may not be able to overcome the problems, and not everyone wants to be a computer scientist anyway, so not everyone has the desire to. What matters is finding the future that matches your positives and interests, and where you can overcome the weaknesses if you set your mind to it.

Programming is not about born talent though (nothing is). We all have strengths and weaknesses and we can all become better by practising and finding strategies that work for us, building upon our strengths and working on our weaknesses, especially when we have the help of a great teacher (or have help to change the way the world works so the weaknesses vanish).

My list above gives some of the key personal characteristics you need to work on improving (however good at them you are or are not right now) if you do want to be a good programmer. Anyone can become better at programming than they are if they have that desire. What matters is that you want to learn, are willing to put the practice in, can develop strategies to overcome your initial weaknesses, and you don’t give up. Neurodiverse people often have a head start on those personal attributes for becoming good at new things too.


EPSRC supports this blog through research grant EP/W033615/1,