by Paul Curzon, Queen Mary University of London

What makes a good environment for child AI learning development? Possibly the same as for human child learning development: Minecraft.

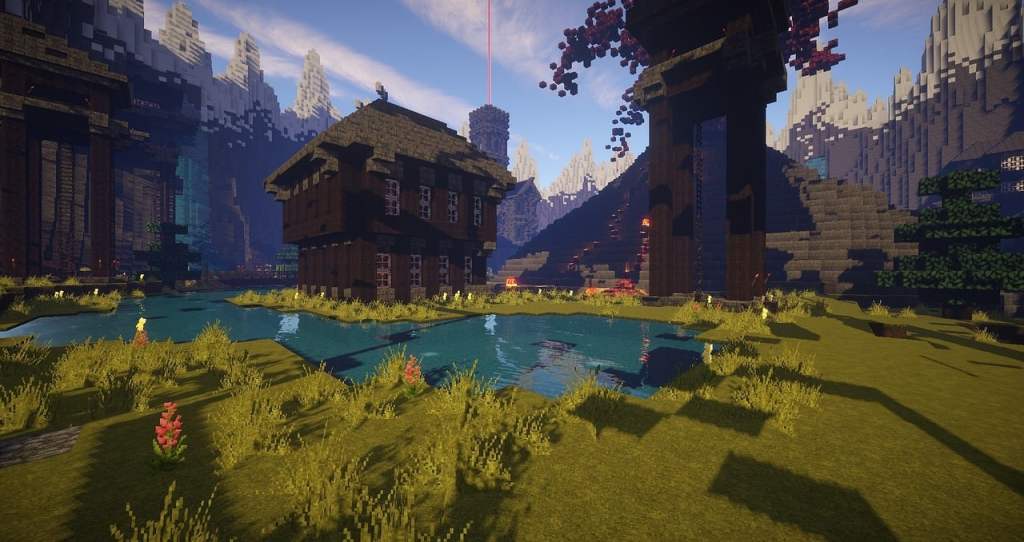

Lego is one of the best things to play with for children’s learning and development. The name Lego comes from the Danish words for “play well”. In the virtual world, Minecraft has of course taken up the mantle. A large part of why both are so wonderful is that they are open-ended and flexible. There are infinite possibilities for what you can build and do. So rather than focussing on one limited thing to learn, as many other games do, they support open-ended creativity and so educational development. Given how positive it can be for children, it shouldn’t be surprising that Minecraft is now being used to help AIs develop too.

Games have long been used to train and test Artificial Intelligence programs. Early programs were developed to play and ultimately beat humans at specific games like Checkers, Chess and then later Go. That mastered, AIs started to learn to play individual arcade games as a way to extend their abilities. A key part of our intelligence is flexibility though: we can learn new games. Aiming to copy this, AIs were trained to follow suit. They became more flexible, showing they could learn to play multiple arcade games well.

This is still missing a vital part of our flexibility though. The thing about all these games is that everything in them is designed to be part of the game, and so part of the task the player has to complete. Everything is there for a reason. It is all an integral part of the game. There are no pieces at all in a chess game that are just there to look nice and will never, ever play a part in winning or losing. Likewise all the rules matter. When problem solving in real life, though, most of the world, whether objects or the way things behave, is not there explicitly to help you solve the problem. It is not even there as a deliberately designed distractor. The real world also doesn’t have just a few distractors: it has lots and lots. Looking round my living room, for example, there are thousands of objects, but only one will help me turn on the TV.

AIs that are trained on games may, therefore, just become good at working in such unreal environments. They may need to be told what matters and what to ignore to solve problems. Real problems are much messier, so put these AIs in the real world, or even a more realistic virtual world, to solve problems and they may turn out to be not very clever at all. Tests of their skills that are based on tidy game tasks may not really test them at all.

Researchers at the University of the Witwatersrand in South Africa decided to tackle this issue, but using yet another game: Minecraft. Because Minecraft is an open-ended virtual world, tackling challenges created in it means working in a world that is about much more than just the problem itself. The Witwatersrand team’s resulting MinePlanner system is a collection of 45 challenges, some easy, some harder. They include gathering tasks (like finding and gathering wood) and building tasks (like building a log cabin), as well as tasks that combine the two. Each comes in three versions. In the easy version nothing is irrelevant. The medium version contains a variety of extraneous things that are not at all useful to the task. The hard version is set in a full Minecraft world where there are thousands of objects that might be used.

To tackle these challenges an AI (or human) needs not just to solve the complex problem set, but also to work out for themselves what in the Minecraft world is relevant to the task they are trying to perform and what isn’t. What matters and what doesn’t?
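To get a feel for what "working out what matters" could look like, here is a minimal sketch in Python. It is not how MinePlanner or any of the tested planners work. It just assumes a made-up table of recipes (the names `RECIPES`, `relevant_items` and `prune_world` are all invented for this illustration) and works backwards from the goal to find which things in the world could possibly help.

```python
from collections import deque

# Made-up recipes for illustration only: item -> the things needed to make it.
# These are NOT real Minecraft recipes or part of MinePlanner.
RECIPES = {
    "log_cabin": ["planks", "door"],
    "planks": ["wood"],
    "door": ["planks"],
    "cake": ["wheat", "egg", "milk", "sugar"],
}


def relevant_items(goal, recipes):
    """Work backwards from the goal, collecting everything it depends on."""
    relevant = {goal}
    queue = deque([goal])
    while queue:
        item = queue.popleft()
        for ingredient in recipes.get(item, []):
            if ingredient not in relevant:
                relevant.add(ingredient)
                queue.append(ingredient)
    return relevant


def prune_world(world_objects, goal, recipes):
    """Keep only the objects that could play a part in reaching the goal."""
    needed = relevant_items(goal, recipes)
    return [obj for obj in world_objects if obj in needed]


# A tiny pretend world full of distractors.
world = ["wood", "flower", "sheep", "wood", "egg", "sand", "planks"]
print(prune_world(world, "log_cabin", RECIPES))
# prints ['wood', 'wood', 'planks']
```

The catch, of course, is that this sketch is handed a neat recipe table telling it what depends on what. In a full Minecraft world, and even more so in the real world, nobody hands you that table, which is exactly what makes the hard versions of the challenges hard.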

The team hope that by setting such tests they will help encourage researchers to develop more flexible intelligences, taking us closer to having real artificial intelligence. The problems are proposed as a benchmark for others to test their AIs against. The Witwatersrand team have already put existing state-of-the-art AI planning systems to the test. They weren’t actually that great at solving the problems and even the best could not complete the harder tasks.

So it is back to school for the AIs, but hopefully now they will get a much better, more flexible and more fun education playing games like Minecraft. Let’s just hope the robots get to play with Lego too, so they don’t get left behind educationally.

EPSRC supports this blog through research grant EP/W033615/1,