by Rafael Pérez y Pérez of the Universidad Autónoma Metropolitana, México

A main goal of computational creativity research is to help us better understand how this essential human characteristic, creativity, works. Creativity is a very complex phenomenon that we only just understand: we need to employ all the tools that we have available to fully comprehend it. Computers are a powerful tool that can help us generate that knowledge and reflect on it. By building computer models of the processes we think are behind creativity, we can start to probe how creativity really works.

When you hear someone claiming that a computer agent, whether program, robot or gadget, is creative, the first question you should ask is: what have we learned? What does studying this agent help us to realise or discover about creativity that we did not know before? If you do not get a satisfactory answer, I would hardly call it a computer model of creativity. As well as being able to generate novel, and interesting or useful, things, a creative agent ought to fulfil other criteria: using its knowledge, creating knowledge and evaluating its own work.

Be knowledgeable!

Truly creative agents should draw on their own knowledge to build the things, such as art, that they create. They should use a knowledge-base, not just create things randomly. We aren’t, for example, interested in programs that arbitrarily pick a picture from the web, randomly apply a filter to it and then claim they have generated art.

Create knowledge!

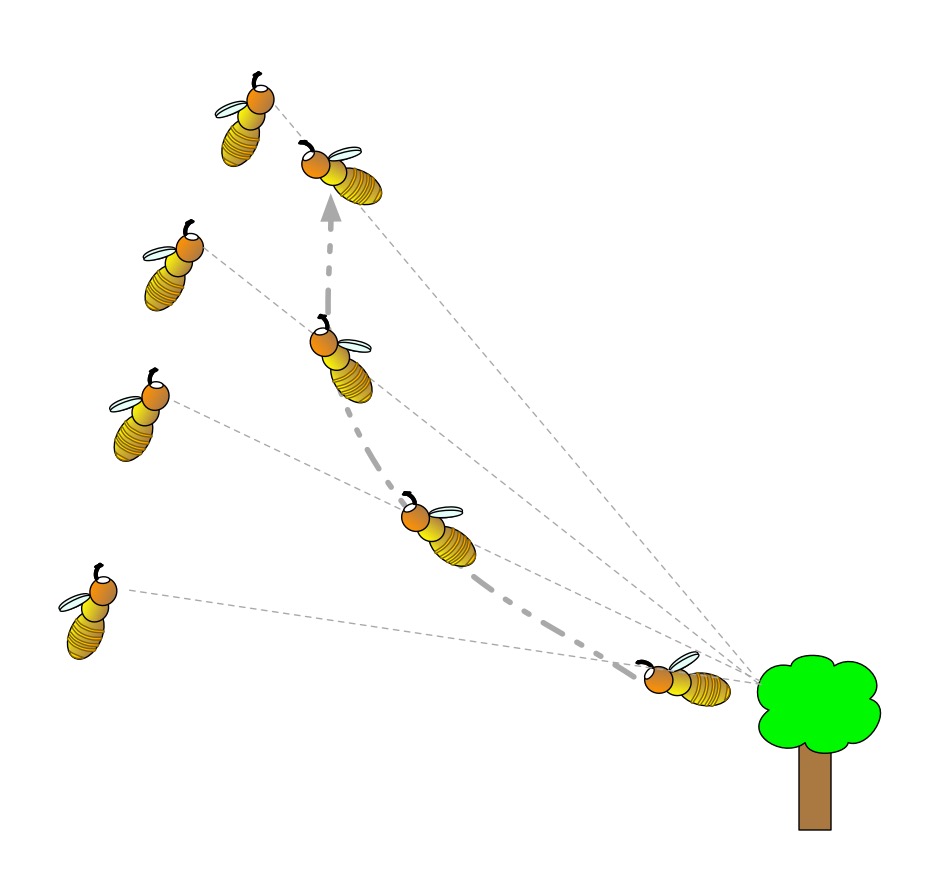

A creative agent must be able to interpret its own creations in order to generate novel knowledge, and that knowledge should help it produce more original pieces. For example, a program that generates story plots must be able to read its own stories and learn from them, as well as from stories developed by others.

Evaluate it!

To deserve to be called creative, an agent also ought to be able to tell whether the things it has created are good or bad. It should be able to evaluate its work, as well as that produced by similar agents. It’s evaluation should also influence the way the generation process works. We don’t want joke creation programs that churn out thousands of ‘jokes’ leaving a human to decide which are actually funny. A creative agent ought to be able to do that itself!

Design me a design

At the moment few, if any, systems fulfil all these criteria. Nevertheless, I suggest they should be the main goals of those doing research in computational creativity. Over the past 20 years I’ve been studying computer models of creativity, aiming to do exactly that. My main research has focused on story generation, but with my team I’ve also developed programs that aim to create novel visual designs. This is the kind of thing someone developing new fabric, wallpaper or tiling patterns might do, for example. With Iván Guerrero and María González I developed a program called TLAHCUILO. It composes visual patterns based on photographs or an empty canvas. It employs geometrical patterns, like repeated shapes, in the picture and then uses them as the basis of a new abstract pattern.

The word “tlahcuilo” refers to painters and writers

in ancient México responsible for preserving

the knowledge and traditions of their people.

To build the system’s knowledge-base, we created a tool that human designers can use to do the same creative task. TLAHCUILO analyses the steps they follow as they develop a composition and registers what it has learnt in its knowledge base. For example, it might note the way the human designer adds elements to make the pattern symmetrical or to add balance. Once these approaches are in its knowledge base it can use them itself in its own compositions. This is a little like the way an apprentice to a craftsman might work, watching the Master at work, gradually building the experience to do it themselves. Our agent similarly builds on this experience to produce its own original outputs. It can also add its own pieces of work to its knowledge-base. Finally, it is able to assess the quality of its designs. It aims to meet the criteria set out above.

Design me a plot

One of TLAHCUILO’s most interesting characteristics is that it uses the same model of creativity that we used to implement MEXICA, our story plot generator (see CS4FN Issue 18). This allows us to compare in detail the differences and similarities between an agent that produces short-stories and an agent that produces visual compositions. We hope this will allow us to generalise our understanding.

Creativity research is a fascinating field. We hope to learn not just how to build creative agents but more importantly to understand what it takes to be a creative human.

More on …

Related Magazines …

EPSRC supports this blog through research grant EP/W033615/1.