In an age of satellite navigation, when all ships have high-tech navigation systems that can tell them exactly where they are, to the metre, on accurate charts that show exactly where dangers lurk, why do we still bother to keep any working lighthouses?

Lighthouses were built around the Mediterranean from the earliest times, originally to help guide ships into ports rather than to protect them from dangerous rocks or currents. The most famous ancient lighthouse was the Great Lighthouse on the island of Pharos, at the entrance to the port of Alexandria. Built in the 3rd century BC under the Ptolemies, the Greek dynasty then ruling Egypt, it was one of the Seven Wonders of the Ancient World.

In the UK, Trinity House, the charity that still runs the lighthouses around England, Wales and the Channel Islands, was set up in Tudor times by Henry VIII, originally to provide warnings for shipping on the Thames. The first offshore lighthouse built to protect shipping from dangerous rocks was built on the Eddystone rocks at the end of the 17th century. It survived only 5 years before it was itself washed away in a storm, along, sadly, with Henry Winstanley, who built it. In the centuries since, however, Trinity House has repeatedly improved the design of its lighthouses, turning them into a highly reliable warning system that has saved countless lives.

There are still several hundred lighthouses around the UK, with over 60 maintained by Trinity House. Each has a unique code, spelled out in the pattern of its flashing light, that tells ships exactly which lighthouse it is, and so what danger awaits them. But why are they still needed at all? They cost a lot of money to maintain, and the UK government doesn't fund them from taxes: the lighthouse service is paid for by "light dues", a charge collected from commercial ships calling at UK ports. So why not just power them down and turn them into museums? Instead, their old lamps have been replaced with powerful LED lights, and the lighthouses have been automated and networked. Light sensors switch the lamps on automatically, and the foghorns sound automatically too. If an LED light fails, a second one automatically switches on in its place, and the control centre, now hundreds of miles away, is alerted. There are no plans to turn them all off and just leave shipping to look after itself. The reason holds a lesson for many other areas where computer technology is replacing “old-fashioned” ways of doing things.
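For readers curious how that kind of automation might be organised, here is a minimal sketch of the control logic in Python. It is purely illustrative and assumes a very simplified lighthouse: the `Lamp` and `Lighthouse` classes, the `DARKNESS_THRESHOLD` value and the alert message are all hypothetical, not Trinity House's real system, and the `alerts` list simply stands in for a message sent to the remote control centre.

```python
from dataclasses import dataclass, field

# Hypothetical value: below this ambient light level (arbitrary units) it counts as dark.
DARKNESS_THRESHOLD = 50


@dataclass
class Lamp:
    name: str
    working: bool = True  # set to False to simulate the lamp failing
    on: bool = False


@dataclass
class Lighthouse:
    primary: Lamp
    backup: Lamp
    # Stands in for messages sent to a remote control centre (hypothetical).
    alerts: list = field(default_factory=list)
    reported: set = field(default_factory=set)  # names of failures already reported

    def active_lamp(self) -> Lamp:
        """Use the primary lamp if it works; otherwise fail over to the backup."""
        return self.primary if self.primary.working else self.backup

    def update(self, ambient_light: int) -> None:
        """One control step, e.g. run every few seconds from a light-sensor reading."""
        dark = ambient_light < DARKNESS_THRESHOLD
        # Report each newly failed lamp once, rather than on every step.
        for lamp in (self.primary, self.backup):
            if not lamp.working and lamp.name not in self.reported:
                self.alerts.append(f"ALERT: {lamp.name} has failed")
                self.reported.add(lamp.name)
        active = self.active_lamp()
        # Only the active lamp is lit, only when dark, and never if it is broken.
        for lamp in (self.primary, self.backup):
            lamp.on = dark and lamp is active and lamp.working


lighthouse = Lighthouse(Lamp("main LED"), Lamp("standby LED"))
lighthouse.update(ambient_light=80)   # daytime: both lamps stay off
lighthouse.update(ambient_light=10)   # dusk: the main LED switches on
lighthouse.primary.working = False    # simulate the main LED failing
lighthouse.update(ambient_light=10)   # the standby LED takes over and an alert is raised
print(lighthouse.backup.on, lighthouse.alerts)  # True ['ALERT: main LED has failed']
```

Keeping the failover decision in one place (`active_lamp`) means the rest of the logic never needs to care which lamp is actually lit, which is one common way to structure this kind of backup system.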

Yes, satellite navigation is a wonderful system and a massive step forward for navigation. The problem is that it is not completely reliable, for several reasons. GPS, for example, is a US system, originally developed for the military, and the US military ultimately retains control of it. It can switch the public version off at any time, and will do so if it thinks that is in its interests. Elon Musk reportedly switched off his Starlink system, which he aims to be a successor to GPS, to prevent Ukraine from using it in the war with Russia. This happened in the middle of a Ukrainian military operation, causing that operation to fail. In July 2025, Starlink also showed that it is not totally reliable anyway when it went down for hours: satellite systems can fail for periods even when no one switches them off intentionally, due to software bugs or other system issues. A third problem is that navigation signals can be deliberately jammed, whether as an act of war or terrorism, or just as high-tech vandalism.

Finally, a more everyday problem is that people over-trust computer systems, which can give them a false sense of security. Satellite navigation gives unprecedented accuracy, so it is trusted to work to finer tolerances than people would dare without it. As a result, it has been noticed that ships now often travel closer to dangerous rocks than they used to. However, the sea is capricious and treacherous. Sail too close to the rocks in a storm and you could suddenly find yourself tossed upon them, the back of your ship broken, just as has happened repeatedly through history.

Physical lighthouses may be old technology, but they work as a very visible and dependable warning system, day or night. They can be used in parallel with satellite navigation: the red and white towers and powerful lights say very clearly, “There is danger here … be extra careful!” That extra, very physical warning of a physical danger is worth having as a reminder not to take risks. The lighthouses also add redundancy. Should the modern navigation systems go down just when a ship needs them, the lighthouses are still there, with nothing extra needing to be done, and so no delay.
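A rough way to see why this redundancy helps is a little probability, assuming (and it is only an assumption) that the two systems fail independently. Suppose satellite navigation is unavailable at a given moment with probability p, and the lighthouse's light with probability q. A ship is left with neither only if both fail at once. The numbers below are made up purely for illustration:

$$
P(\text{both unavailable}) = p \times q, \qquad \text{e.g. } p = 0.01,\; q = 0.001 \;\Rightarrow\; P = 0.00001
$$

Independence is plausible here because jamming or switching off a satellite signal does nothing to a lamp on a rock, which is exactly what makes a completely different, physical backup so valuable.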

It is not out of some sense of nostalgia that the lighthouses still work. Updated with modern technology of their own, they are still saving lives.

– Paul Curzon, Queen Mary University of London

More on …

- Gladys West: where is my satellite?

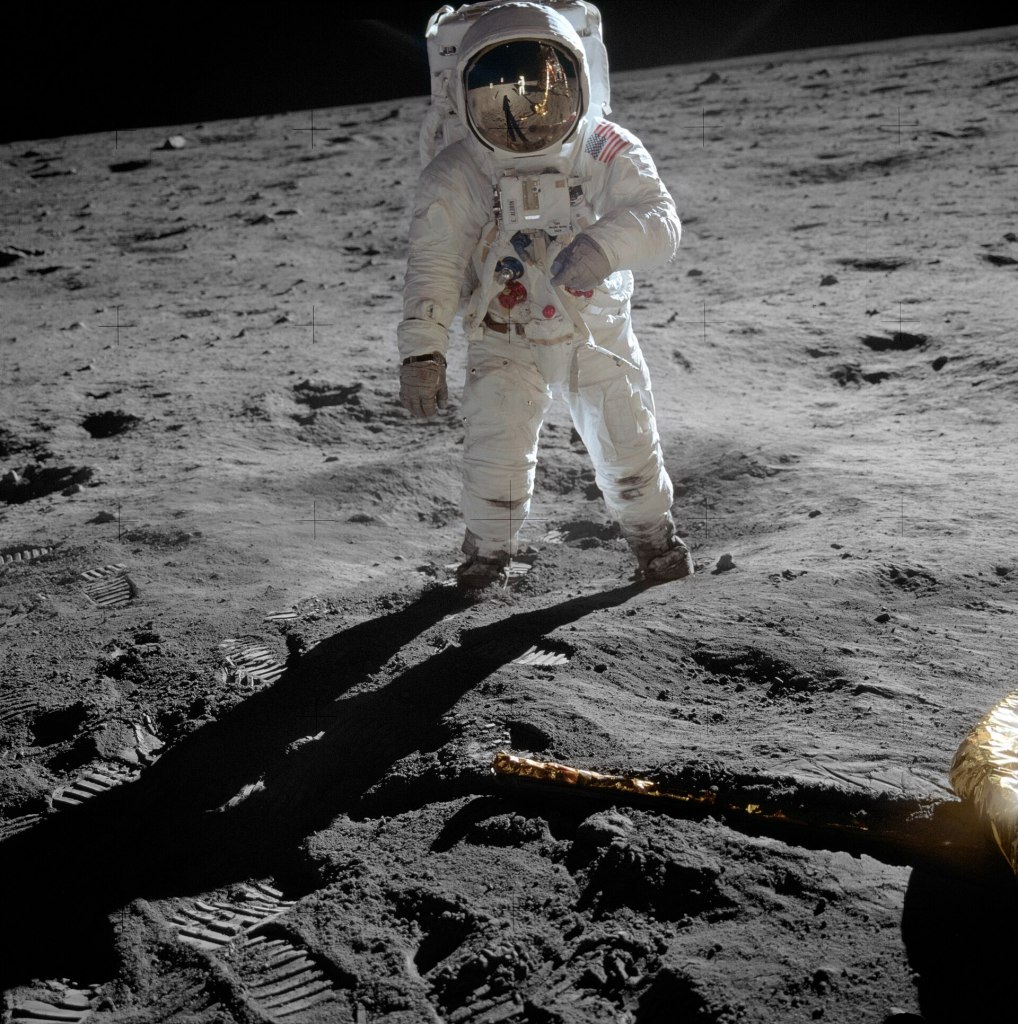

- Apollo Emergency! Take a deep breath, hold your nerve and count to 5

- Climb Portland Bill Lighthouse [EXTERNAL]

Subscribe to be notified whenever we publish a new post to the CS4FN blog.

This blog is funded by EPSRC on research agreement EP/W033615/1.