by Paul Curzon, Queen Mary University of London

Mary Clem was a pioneer of dependable computing long before the first computers existed. She was a computer herself, but became more like a programmer.

Back before there were computers there were human computers: people who did the calculations that machines now do. Victorian inventor, Charles Babbage, worked as one. It was the inspiration for him to try to build a steam-powered computer. Often, however, it was women who worked as human computers especially in the first half of the 20th century. One was Mary Clem in the 1930s. She worked for Iowa State University’s statistical lab. Despite having no mathematical training and finding maths difficult at school, she found the work fascinating and rose to become the Chief Statistical Clerk. Along the way she devised a simple way to make sure her team didn’t make mistakes.

The start of stats

Big Data, the idea of processing lots of data to turn that data into useful information, is all the rage now, but its origins lie at the start of the 20th century, driven by human computers using early calculating machines. The 1920s marked the birth of statistics as a practical mathematical science. A key idea was that of calculating whether there were correlations between different data sets such as rainfall and crop growth, or holding agricultural fairs and improved farm output. Correlation is the the first step to working out what causes what. it allows scientists to make progress in working out how the world works, and that can then be turned into improved profits by business, or into positive change by governments. It became big business between the wars, with lots of work for statistical labs.

Calculations and cards

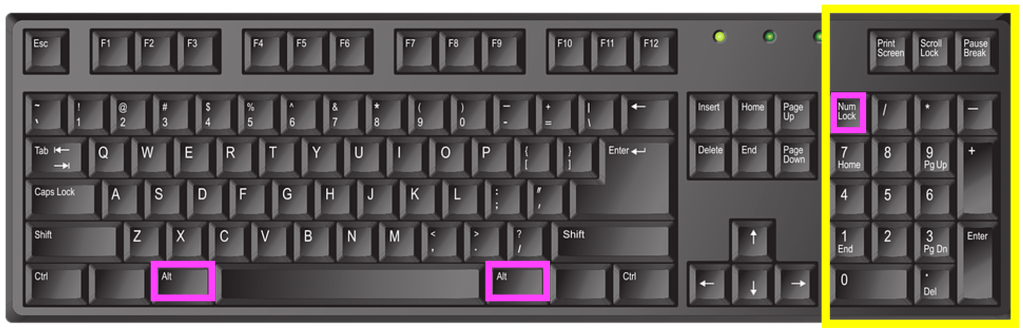

Originally, in and before the 19th century, human computers did all the calculations by hand. Then simple calculating machines were invented, so could be used by the human computers to do the basic calculations needed. In 1890 Herman Hollerith invented his Tabulator machine (his company later became computing powerhouse, IBM). The Tabulator machine was originally just a counting machine created for the US census, though later versions could do arithmetic too. The human computers started to use them in their work. The tabulator worked using punch cards, cards that held data in patterns of holes punched in to them. A card representing a person in the census might have a hole punched in one place if they were male, and in a different place if they were female. Then you could count the total number of any property of a person by counting the appropriate holes.

Mary was being more than a computer,

and becoming more like a programmer

Mary’s job ultimately didn’t just involve doing calculations but also involved preparing punch cards for input into the machines (so representing data as different holes on a card). She also had to develop the formulae needed for doing calculations about different tasks. Essentially she was creating simple algorithms for the human computers using the machines to follow, including preparing their input. Her work was therefore moving closer to that of a computer operator and then programmer’s job.

Zero check

She was also responsible for checking calculations to make sure mistakes were not being made in the calculations. If the calculations were wrong the results were worse than useless. Human computers could easily make mistakes in calculations, but even with machines doing calculations it was also possible for the formulae to be wrong or mistakes to be made preparing the punch cards. Today we call this kind of checking of the correctness of programs verification and validation. Since accuracy mattered, this part of he job also mattered. Even today professional programming teams spend far more time checking their code and testing it than writing it.

Mary took the role of checking for mistakes very seriously, and like any modern computational thinker, started to work out better ways of doing it that was more likely to catch mistakes. She was a pioneer in the area of dependable computing. What she came up with was what she called the Zero Check. She realised that the best way to check for mistakes was to do more calculations. For the calculations she was responsible for, she noticed that it was possible to devise an extra calculation, whereby if the other answers (the ones actually needed) have been correctly calculated then the answer to this new calculation is 0. This meant, instead of checking lots of individual calculations with different answers (which is slow and in itself error prone), she could just do this extra calculation. Then, if the answer was not zero she had found a mistake.

A trivial version of this general idea when you are doing a single calculation is to just do it a second time, but in a different way. Rather than checking manually if answers are the same, though, if you have a computer it can subtract the two answers. If there are no mistakes, the answer to this extra check calculation should be 0. All you have to do is to look for zero answers to the extra subtractions. If you are checking lots of answers then, spotting zeros amongst non-zeros is easier for a human than looking for two numbers being the same.

Defensive Programming

This idea of doing extra calculations to help detect errors is a part of defensive programming. Programmers add in extra checking code or “assertions” to their programs to check that values calculated at different points in the program meet expected properties automatically. If they don’t then the program itself can do something about it (issue a warning, or apply a recovery procedure, for example).

A similar idea is also used now to catch errors whenever data is sent over networks. An extra calculation is done on the 1s and 0s being sent and the answer is added on to the end of the message. When the data is received a similar calculation is performed with the answer indicating if the data has been corrupted in transmission.

A pioneering human computer

Mary Clem was a pioneer as a human computer, realising there could be more to the job than just doing computations. She realised that what mattered was that those computations were correct. Charles Babbages answer to the problem was to try to build a computing machine. Mary’s was to think about how to validate the computation done (whether by a human or a machine).

More on …

Related Magazines …

EPSRC supports this blog through research grant EP/W033615/1.