Iain M Banks’s science fiction novels about ‘The Culture’ imagine a universe inhabited (and largely run) by ‘Minds’. These are incredibly intelligent machines – mainly spaceships – that are also independently thinking, conscious beings with their own personalities. From the replicants in Blade Runner and the robots in Star Wars to Banks’s Minds, science fiction is full of intelligent machines. Could we ever really create a machine with a mind: not just a computer that computes, but one that really thinks? Philosophers have been arguing about it for centuries. Things came to a head when philosopher John Searle came up with a thought experiment called the ‘Chinese room’. He claims it gives a cast-iron argument that programmed ‘Minds’ can never exist. Are the computer scientists who are trying to build real artificial intelligences wasting their time? Or could zombies lurch to the rescue?

The Shaolin warrior monk

Imagine that the galaxy is populated by an advanced civilisation that has solved the problem of creating artificial intelligence programs. Wanting to observe us more closely, they build a replicant that looks, dresses and moves just like a Shaolin warrior monk (it has to protect itself, and the aliens watch too much TV!). They create a program for it that encodes the rules of Chinese. The machine is dispatched to Earth. Claiming to have taken a vow of silence, it does not speak (the aliens weren’t hot on accents). It reads Chinese characters written by the earthlings, then follows the instructions in its Chinese program that tell it which Chinese characters to write in response. It duly has written conversations with all the earthlings it meets as it wanders the planet, leaving them all in no doubt that they have been conversing with a real human Chinese speaker.

The question is, is that machine monk really a Mind? Does it really understand Chinese or is it just simulating that ability?

The Chinese room

Searle answers this by imagining a room in which a human sits. She speaks no Chinese but instead has a book of rules – the aliens’ computer program written out in English. People pass in Chinese symbols through a slot. She looks them up in the book and it tells her the Chinese symbols to pass back out. As she doesn’t understand Chinese she has no idea what the symbols coming in or going out mean. She is just uncomprehendingly following the book. Yet to the outside world she seems to be just as much a native speaker as that machine monk. She is simulating the ability to understand Chinese. As she’s using the same program as the monk, doing exactly what it would do, it follows that the machine monk is also just simulating intelligence. Therefore programs cannot understand. They cannot have a mind.
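To picture what ‘uncomprehendingly following the book’ might look like, here is a toy sketch in Python. It is only an illustration: the names `RULE_BOOK`, `DEFAULT_REPLY` and `room_reply` are made up for this example, the handful of entries are invented, and a rule book good enough to fool anyone would have to be vastly more elaborate than a simple lookup table. The point to notice is that nothing in the code ever uses what the symbols mean.

```python
# Hypothetical rule book: incoming symbols on the left, symbols to pass
# back out on the right. The entries are invented for illustration only.
RULE_BOOK = {
    "你好": "你好",
    "你会说中文吗？": "会。",
    "你叫什么名字？": "我是一个和尚。",
}

# Hypothetical fallback used when the incoming symbols are not in the book.
DEFAULT_REPLY = "请再说一遍。"


def room_reply(incoming: str) -> str:
    """Look the incoming symbols up in the book and return what it lists.

    The function only matches one string of symbols against another.
    It never translates or interprets them.
    """
    return RULE_BOOK.get(incoming, DEFAULT_REPLY)


if __name__ == "__main__":
    # Pass a slip of paper through the slot and print what comes back.
    print(room_reply("你好"))
```

Swapping every entry for meaningless squiggles would leave the program working in exactly the same way, which is the sense in which the human in the room is only shuffling symbols.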

Is that machine monk a Mind?

Searle’s argument is built on some assumptions. Programs are ‘syntactic devices’: that just means they move symbols around, swapping them for others. They do it without giving those symbols any meaning. A human mind, on the other hand, works with ‘semantics’ – the meanings of symbols, not just the symbols themselves. We understand what the symbols mean. The Chinese room is supposed to show you can’t get meaning by pushing symbols around. As any future artificial intelligence will be based on programs pushing symbols around, it will not be a Mind that understands what it is doing.

The zombies are coming

So is this argument really cast iron? It has generated lots of debate, virtually all of it aiming to prove Searle wrong. The counter-arguments are varied and even the zombies have piled in to join the fight: philosophical ones at least. What is a philosophical zombie? It’s just a human with no consciousness, no mind. One way to attack Searle’s argument is to attack the assumptions. That’s what the zombies are there to do. If the assumptions aren’t actually true then the argument falls apart. According to Searle, human brains do something more than push symbols about; they have a way of working with meaning. However, there can’t be any way of detecting that extra something just by talking to a person: if there were, the same test could have been used to show that the machine monk wasn’t a Mind.

Imagine then, there has been a nuclear accident and lots of babies are born with a genetic mutation that makes them zombies. They have no mind so no ability to understand meaning. Despite that they act exactly like humans: so much so that there is no way to tell zombies and humans apart. The zombies grow up, marry and have zombie children.

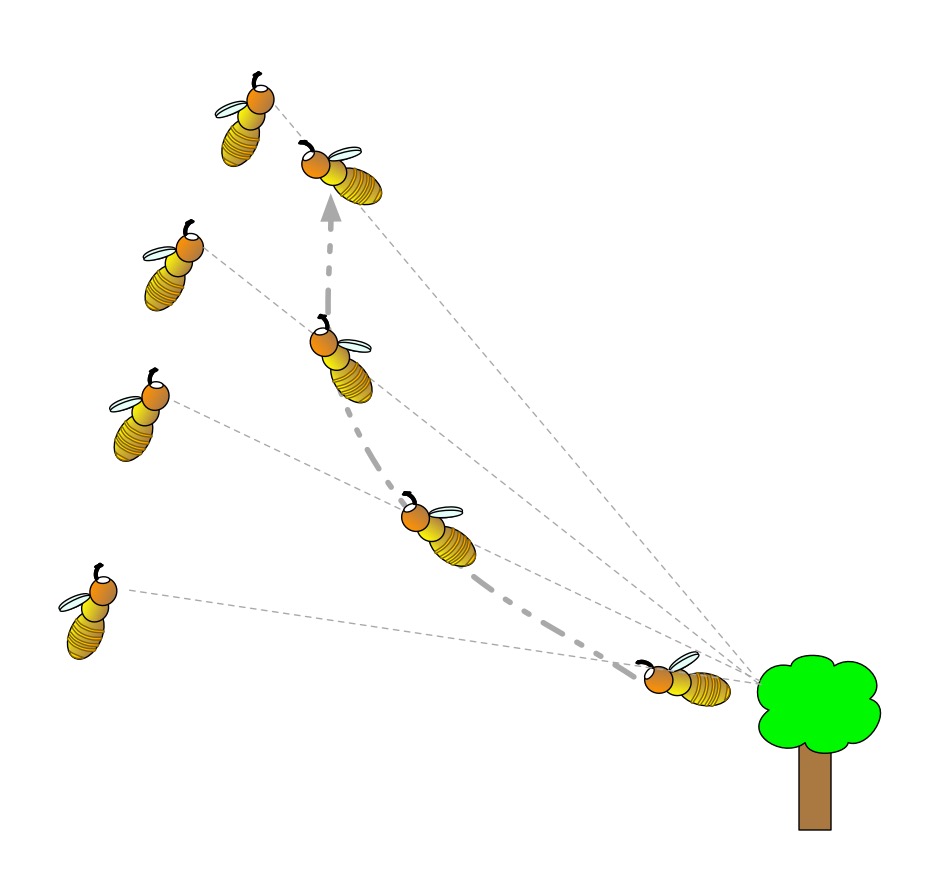

Presumably zombie brains are simpler than human ones – they don’t have whatever complication it is that introduces minds. Being simpler they have a fitness advantage that will allow them to out-compete humans. They won’t need to roam the streets killing humans to take over the world. If they wait long enough and keep having children, natural selection will do it for them.

The zombies are here

The point is it could have already happened. We could all be zombies but just don’t know it. We think we are conscious but that could just be an illusion – another simulation. We have no way to prove we are not zombies and if we could be zombies then Searle’s assumption that we are different to machines may not be true. The Chinese room argument falls apart.

Does it matter?

The arguments and counter-arguments continue. To an engineer trying to build an artificial intelligence this actually doesn’t matter. Whether you have built a Mind or just something that exactly simulates one makes no practical difference. It makes a big difference to philosophers, though, and to our understanding of what it means to be human.

Let’s leave the last word to Alan Turing. He pointed out 30 years before the Chinese room was invented that it’s generally considered polite to assume that other humans are Minds like us (not zombies). If we do end up with machine intelligences so good we can’t tell they aren’t human, it would be polite to extend the assumption to them too. That would surely be the only humane thing to do.

Paul Curzon, Queen Mary University of London (from the cs4fn archive)

This blog is funded by EPSRC on research agreement EP/W033615/1.