The problems of recognising faces

by Jo Brodie and Paul Curzon, Queen Mary University of London

How face recognition technology caused the wrong Black man to be arrested.

The police were waiting for Robert Williams when he returned home from work in Detroit, Michigan. They arrested him for robbery in front of his wife and terrified daughters, aged two and five, and took him to a detention centre, where he was kept overnight. During his interview an officer showed him two grainy CCTV photos of a suspect alongside a photo of Williams from his driving licence. All the photos showed a large Black man, but that’s where the similarity ended – it wasn’t Williams on CCTV but a completely different man. Williams held up the photos to his face and said, “I hope you don’t think all Black people look alike.” The officer replied that “the computer must have got it wrong.”

Williams’ problems began several months before his arrest, when video clips and images of the robbery from the CCTV camera were run through face recognition software used by the Detroit Police Department. The system has access to the photos from everyone’s driving licence and can compare different faces until it finds a potential match. In this case it falsely identified Robert Williams. No system is ever perfect, but studies have shown that face recognition technology is often better at correctly matching lighter-skinned faces than darker-skinned ones.

Check the signature

Face recognition does not actually work by comparing pictures but by comparing data. When a picture of a face is added to the system, lots of measurements are taken, such as how far apart the eyes are or what shape the nose is. Together these numbers give a signature for each face, and that signature is added to the database. When looking for a match from, say, a CCTV image, the system first works out the signature of the new image. Algorithms then look for the signature in the database that is “nearest” to the new one. How well this works depends on the particular features chosen, amongst many other things. If the features chosen are a poor way to distinguish particular groups of people then there will be lots of bad matches.

But how does it decide what is “nearest” anyway, given that in essence it is just comparing groups of numbers? Many algorithms are based on machine learning. The system might be trained on lots of faces and told which match and which don’t, allowing it to look for patterns that are good ways to predict matches. If, however, it is trained mainly on lighter-skinned faces it is likely to be bad at spotting matches for faces of other ethnic backgrounds. It may actually decide that “all Black people look alike”.
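To make the idea of a “nearest” signature concrete, here is a minimal sketch in Python. It is an illustration only, not how any real police system works: the names, the three measurements and their values are all made up, and it assumes “nearest” means the ordinary straight-line (Euclidean) distance between the lists of numbers, whereas a real system would typically learn its own features and its own notion of distance.

```python
import math

# Hypothetical database: each face is reduced to a signature of three
# made-up measurements (say eye spacing, nose width, jaw width).
database = {
    "person_a": (6.2, 3.1, 12.0),
    "person_b": (5.8, 3.6, 13.1),
    "person_c": (6.9, 2.8, 11.4),
}


def nearest_signature(probe, signatures):
    """Return (name, distance) for the stored signature closest to the probe."""
    best_name, best_distance = None, math.inf
    for name, signature in signatures.items():
        # Assumption: "nearest" is measured by Euclidean distance.
        distance = math.dist(probe, signature)
        if distance < best_distance:
            best_name, best_distance = name, distance
    return best_name, best_distance


# Hypothetical signature measured from a grainy CCTV image.
cctv_signature = (6.0, 3.5, 12.9)
name, distance = nearest_signature(cctv_signature, database)
print(name, round(distance, 2))
```

Notice that a search like this always returns somebody, as long as the database is not empty, even if the person in the CCTV image is not in it at all. The “nearest” face is only the least different one the system knows about, which is why a match can only ever be a starting point for further checks.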

Biasing the investigation

However, face recognition is only part of the story. A potential match is only a pointer towards someone who might be a suspect and it’s certainly not a ‘case closed’ conclusion – there’s still work to be done to check and confirm. But as Williams’ lawyer, Victoria Burton-Harris, pointed out, once the computer had suggested Williams as a suspect, that “framed and informed everything that officers did subsequently”. The man in the CCTV image wore a red baseball cap. It was for a team that Williams didn’t support (he’s not even a baseball fan) but no-one asked him about it. They also didn’t ask if he was in the area at the time (he wasn’t) or had an alibi (he did). Instead the investigators asked a security guard at the shop where the theft took place to look at some photos of possible suspects, and he picked Williams from the line-up of images. Unfortunately the guard hadn’t been on duty on the day of the theft and had only seen the CCTV footage.

Robert Williams spent 30 hours in custody for a crime he didn’t commit after his face was mistakenly selected from a database. He was eventually released and the case dropped but his arrest is still on record along with his ‘mugshot’, fingerprints and a DNA sample. In other words he was wrongly picked from one database and has now been unfairly added to another. The experience for his whole family has been very traumatic and sadly his children’s first encounter with the police has been a distressing rather than a helpful one.

Remove the links

The American Civil Liberties Union (ACLU) has filed a lawsuit against the Detroit Police Department on Williams’ behalf for his wrongful arrest. It is not known how many people have been arrested because of face recognition technology but, given how widely it is used, it’s likely that others will have been misidentified too. The ACLU and Williams have asked for a public apology, for his police record to be cleared and for his images to be removed from any face recognition database. They have also asked that the Detroit Police Department stop using face recognition in their investigations. If Robert Williams had lived in New Hampshire he’d never have been arrested, as there is a law there which prevents face recognition software from being linked with driving licence databases.

In June 2020 Amazon, Microsoft and IBM denied the police any further access to their face recognition technology. IBM has also said that it will no longer work in this area because of concerns about racial profiling (targeting a person based on assumptions about their race instead of their individual actions) and the violation of privacy and human rights. Campaigners are asking for a new law that protects people if this technology is used in future. But the ACLU and Robert Williams are asking for people to just stop using it. As Williams puts it: “I don’t want my daughters’ faces to be part of some government database. I don’t want cops showing up at their door because they were recorded at a protest the government didn’t like.”

Technology is only as good as the data and the algorithms it is based on. However, that isn’t the whole story. Even if very accurate, it is only as good as the way it is used. If as a society we want to protect people from bad things happening, perhaps some technologies should not be used at all.


An earlier version of this article was originally published on the Teaching London Computing website where you can find references and further reading.

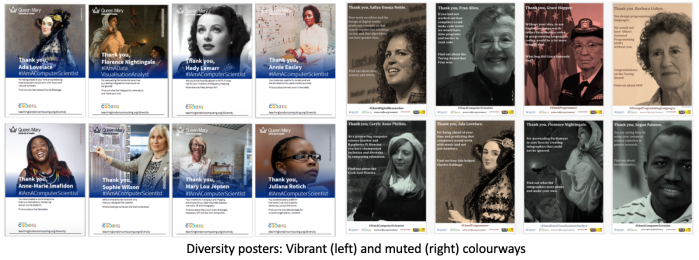

One of the aims of our Diversity in Computing posters (see below) is to help a classroom of young people see the range of computer scientists which includes people who look like them and people who don’t look like them. You can download our posters free from the link below.

See more in ‘Celebrating Diversity in Computing’

We have free posters to download and some information about the different people who’ve helped make modern computing what it is today.


EPSRC supports this blog through research grant EP/W033615/1.
