Welcome to Day 7 of our advent calendar. Yesterday’s post was about Printed Circuit ~~Birds~~ Boards. Today’s theme is the Christmas robin redbreast, which features on lots of Christmas cards and is making a special appearance on our CS4FN Computing advent calendar.

In this longer post we’ll focus on the ways computer scientists are learning about our feathered friends and we’ll also make room for some of the bird-brained April Fools jokes in computing too.

We hope you enjoy it, and there’s also a puzzle at the end.

1. Computing Sounds Wild – bird is the word

Our free CS4FN magazine, Computing Sounds Wild (you can download a copy here), features the word “bird” 60 times so it’s definitely very bird-themed.

“An interest in nature and an interest in computers don’t obviously go well together. For a band of computer scientists interested in sound they very much do, though. In this issue we explore the work of scientists and engineers using computers to understand, identify and recreate wild sounds, especially those of birds. We see how sophisticated algorithms that allow machines to learn, can help recognize birds even when they can’t be seen, so helping conservation efforts. We see how computer models help biologists understand animal behaviour, and we look at how electronic and computer-generated sounds, having changed music, are now set to change the soundscapes of films. Making electronic sounds is also a great, fun way to become a computer scientist and learn to program.”

2. Singing bird – a human choir singing birdsong

by Jane Waite, QMUL

This article was originally published on the CS4FN website and can also be found on page 15 in the magazine linked above.

“I’m in a choir”. “Really, what do you sing?” “I did a blackbird last week, but I think I’m going to be woodpecker today, I do like a robin though!”

This is no joke! Marcus Coates, a British artist, got up very early and, working with wildlife sound recordist Geoff Sample, used 14 microphones to record the dawn chorus over lots of chilly mornings. They slowed the sounds down and matched up each species of bird with different types of human voices. Next they created a film of 19 people making bird song. Each person sang a different bird in their own habitat: a car, a shed, even a lady in the bath! The 19 tracks are played together to make the dawn chorus. See it on YouTube below.

Marcus didn’t stop there: he wrote a new bird song score. Yes, for people to sing a new top ten bird hit, but they have to do it very slowly. People sing ‘bird’ about 20 times slower than birds sing ‘bird’: ‘whooooooop’, ‘whooooooop’, ‘tweeeeet’. For a special performance, a choir learned the new song, a new dawn chorus. They sang the slowed-down version live, which was recorded, speeded back up and played to the audience. I was there! It was amazing! A human performance became a minute of tweeting joy. Close your eyes and ‘whoop’, you were in the woods at the crack of dawn!

Computationally thinking a performance

Computational thinking is at the heart of the way computer scientists solve problems. Marcus Coates doesn’t claim to be a computer scientist; he is an artist who looks for ways to see how people are like other animals. But we can get an idea of what computational thinking is all about by looking at how he created his sounds. Firstly, he and wildlife sound recordist Geoff Sample had to focus on the individual bird sounds in the original recordings and ignore detail they didn’t need. That is abstraction: listening for each bird and working out which aspects of bird sound were important. They looked for patterns, isolating each voice. Sometimes the bird’s performance was messy and they could not hear particular species clearly, so they were constantly checking for quality. For each bird, they listened and listened until they found just the right ‘slow it down’ speed. Different birds needed different speeds for people to be able to mimic them, and different kinds of human voices suited each bird type: attention to detail mattered enormously. They had to check the results carefully, evaluating, making sure each really did sound like the appropriate bird and all fitted together into the Dawn Chorus soundscape. They also had to create a bird language, another abstraction, a score as track notes, and that is just an algorithm for making sounds!

3. Sophisticated songbird singing – how do they do it?

by Dan Stowell, QMUL

This article was originally published on the CS4FN website and can also be found on page 14 in the magazine linked above.

How do songbirds make such complex sounds? The answer is on a different branch of the tree of evolution…

We humans have a set of vocal folds (or vocal cords) in our throats, and they vibrate when we speak to make the pitched sound. Air from your lungs passes over them and they chop up the column of air letting more or less through and so making sound waves. This vocal ‘equipment’ is similar in mammals like monkeys and dogs, our evolutionary neighbours. But songbirds are not so similar to us. They make sounds too, but they evolved this skill separately, and so their ‘equipment’ is different: they actually have two sets of vocal folds, one for each lung.

Sometimes if you hear an impressive, complex sound from a bird, it’s because the bird is actually using the two sides of their voice-box together to make what seems like a single extra-long or extra-fancy sound. Songbirds also have very strong muscles in their throat that help them change the sound extremely quickly. Biologists believe that these skills evolved so that the birds could tell potential mates and rivals how healthy and skillful they were.

So if you ever wondered why you can’t quite sing like a blackbird, now you have a good excuse!

4. Data transmitted on the wing

Computers are great ways of moving data from one place to another and the internet can let you download or share a file very quickly. Before I had the internet at home, if I wanted to work on a file on my home computer I had to save a copy from my work computer onto a memory stick and plug it into my laptop at home. Once I ‘got connected’ at home I was then able to email myself with an attachment and use my home broadband to pick up the file. Now I don’t even need to do that. I can save a file on my work computer, it synchronises with the ‘cloud’ and when I get home I can pick up where I left off. When I was using the memory stick my rate of data transfer was entirely down to the speed of road traffic as I sat on the bus on the way to work. Fairly slow, but the data definitely arrived in one piece.
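If you want to put a number on that bus journey, the sum is just the amount of data carried divided by the time it takes to arrive. Here is a minimal Python sketch of that calculation. The function name, the 4,000 megabyte memory stick and the 40 minute ride are made-up values for illustration, not figures from the story above.

```python
def effective_rate_mbps(file_size_megabytes: float, journey_minutes: float) -> float:
    """Average transfer rate, in megabits per second, of data carried by hand."""
    if journey_minutes <= 0:
        raise ValueError("the journey has to take some time")
    megabits = file_size_megabytes * 8   # 8 bits in every byte
    seconds = journey_minutes * 60
    return megabits / seconds


# Hypothetical example: a 4,000 megabyte memory stick on a 40 minute bus ride.
rate = effective_rate_mbps(file_size_megabytes=4000, journey_minutes=40)
print(f"{rate:.1f} megabits per second")  # prints 13.3 megabits per second
```

The average rate can look respectable, but nothing at all arrives until the bus does, so the waiting time (the latency) is the whole length of the journey.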

In 1990 a joke memo was published for April Fool’s Day which suggested the use of homing pigeons as a form of internet, in which the birds might carry small packets of data. The memo, called ‘IP over Avian Carriers’ (that is, a bird-based internet), was written in a mock-serious tone (you can read it here) but although it was written for fun the idea has actually been used in real life too. Photographers in remote areas with minimal internet signal have used homing pigeons to send their pictures back.

A company in the US which offers adventure holidays including rafting used homing pigeons to return rolls of film (before digital photography took over) back to the company’s base. The guides and their guests would take loads of photos while having fun rafting on the river and the birds would speed the photos back to the base, where they could be developed, so that when the adventurous guests arrived later their photos were ready for them.

Further reading

Pigeons keep quirky Poudre River rafting tradition afloat (17 July 2017) Coloradoan.

5. Serious fun with pigeons

On April Fool’s Day in 2002 Google ‘admitted’ to its users that the reason their web search results appeared so quickly and were so accurate was that, rather than using automated processes to grab the best result, Google was actually using a bank of pigeons to select the best results. Millions of pigeons viewing web pages and ~~pecking~~ picking the best one for you when you type in your search question. Pretty unlikely, right?

In a rather surprising non-April Fool twist some researchers decided to test out how well pigeons can distinguish different types of information in hospital photographs. They trained pigeons by getting them to view medical pictures of tissue samples taken from healthy people as well as pictures taken from people who were ill. The pigeons had to peck one of two coloured buttons and in doing so learned which pictures were of healthy tissue and which were diseased. If they pecked the correct button they got an extra food reward.

The researchers then tested the pigeons with a fresh set of pictures, to see if they could apply their learning to pictures they’d not seen before. Incredibly the pigeons were pretty good at separating the pictures into healthy and unhealthy, with an 80 per cent hit rate.
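A ‘hit rate’ like this is simply the number of correct answers divided by the number of pictures shown. The sketch below works it out in Python for a made-up test of ten pictures. The labels and the pecks are invented for illustration and are not the researchers’ data.

```python
def hit_rate(pecks, answers):
    """Fraction of pictures where the peck matched the true answer."""
    if not answers or len(pecks) != len(answers):
        raise ValueError("need one peck per picture, and at least one picture")
    correct = sum(1 for peck, answer in zip(pecks, answers) if peck == answer)
    return correct / len(answers)


# Invented example: ten new pictures the pigeon has never seen before.
answers = ["healthy", "diseased", "healthy", "healthy", "diseased",
           "diseased", "healthy", "diseased", "healthy", "diseased"]
pecks = ["healthy", "diseased", "diseased", "healthy", "diseased",
         "diseased", "healthy", "healthy", "healthy", "diseased"]

print(f"{hit_rate(pecks, answers):.0%}")  # prints 80%
```

Testing on fresh pictures matters: a score measured only on the training pictures could just mean the pigeon had memorised them.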

Further reading

Principle behind Google’s April Fools’ pigeon prank proves more than a joke (27 March 2019) The Conversation.

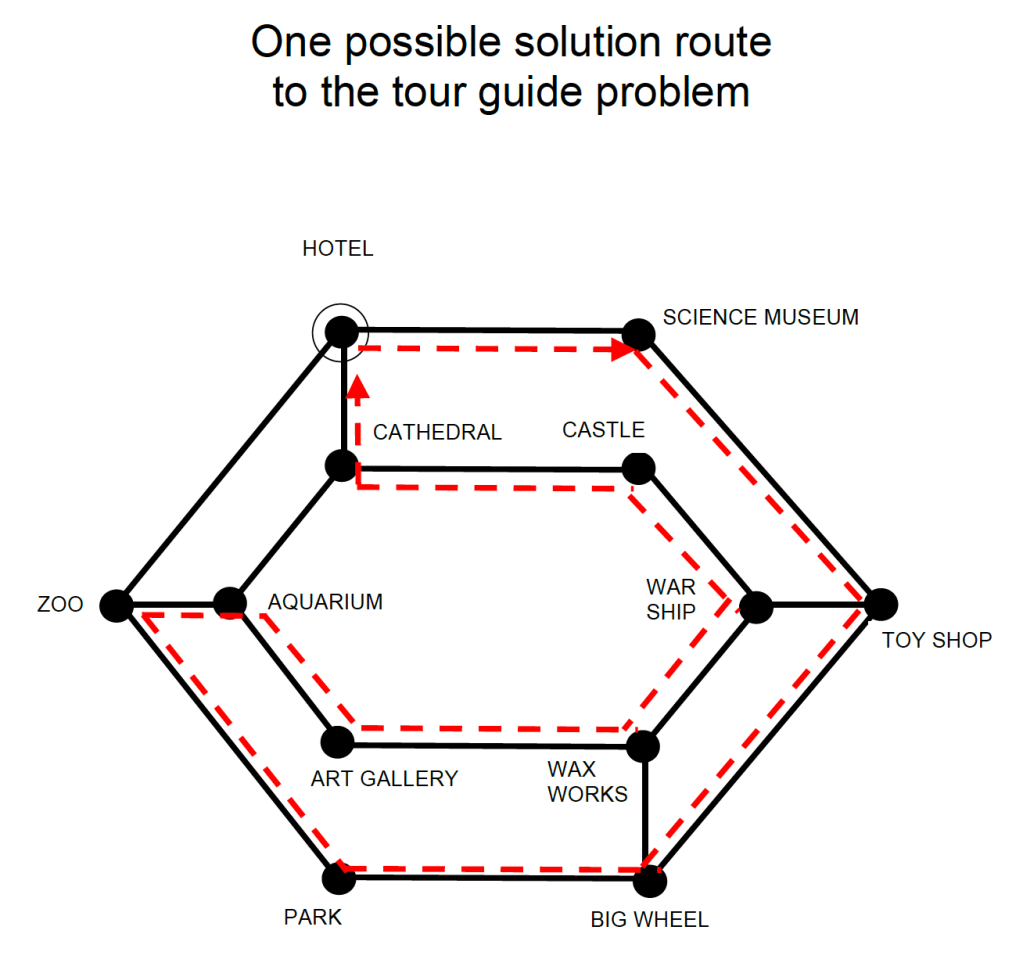

6. Today’s puzzle

You can download this as a PDF to PRINT or as an editable PDF that you can fill in on a COMPUTER.

You might wonder “What do these kriss-kross puzzles have to do with computing?” Well, you need to use a bit of logical thinking to fill one in and come up with a strategy. If there’s only one word of a particular length then it has to go in that space and can’t fit anywhere else. You’re then using pattern matching to decide which other words can fit in the spaces around it and which match the letters where they overlap. Younger children might just enjoy counting the letters and writing them out, or practising phonics or spelling.
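The strategy described above can be sketched in a few lines of Python. This is only an illustration: the word list is hypothetical (it is not the list from today’s puzzle), and the two function names are our own. A dot in a pattern stands for a square that is still empty.

```python
from collections import Counter


def unique_length_words(words):
    """Words whose length no other word shares, so their slot is certain."""
    length_counts = Counter(len(word) for word in words)
    return [word for word in words if length_counts[len(word)] == 1]


def matching_words(pattern, words):
    """Words that fit a partly filled slot, e.g. '..n.h' ('.' = empty square)."""
    return [
        word for word in words
        if len(word) == len(pattern)
        and all(p == "." or p == letter for p, letter in zip(pattern, word))
    ]


# Hypothetical word list, for illustration only.
words = ["robin", "pigeon", "blackbird", "wren", "finch", "magpie"]

print(unique_length_words(words))      # prints ['blackbird', 'wren']
print(matching_words("..n.h", words))  # prints ['finch']
```

Starting with the words that can only go in one place, then using the crossing letters to narrow down the rest, is the same order a person would usually tackle the grid in.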

We’ll post the answer tomorrow.

7. Answer to yesterday’s puzzle

The creation of this post was funded by UKRI, through grant EP/K040251/2 held by Professor Ursula Martin, and forms part of a broader project on the development and impact of computing.

EPSRC supports this blog through research grant EP/W033615/1.