Pseudocode poems are poems that work both as a poem and as an algorithm, so they can be read or executed. They use sequencing, selection or repetition constructs, along with other kinds of statements that take actions, and you can implement them as an actual program. Below are our attempts. Can you write better ones?

Poems often exploit the ambiguity of language and aim to stir emotions. Pseudocode is intended to be precise, and programs certainly are: they do something specific and have a single precise meaning. Writing pseudocode poems that do both can be a lot of fun, just as writing normal programs is. The idea was inspired by Brian Bilston’s poem ‘Two Paths Diverged’. Read it here or buy his wonderful book of poems, ‘You Took the Last Bus Home’.

I am not a great poet, but here are some of my attempts at pseudocode poems to at least give you the idea. They are, in turn, based on the core control structures of Sequencing, Selection and Repetition. They also use print statements and assignments to get things done.

Sequencing

This pseudocode poem is based on sequencing: doing things one after the other.

What am I when it’s all over?

I am dire.

I am fire.

I am alone.

I am stone.

I am old.

I am cold.

We use the verb TO BE as the equivalent of assignment: setting the value of a variable. Here it is implemented as a Python program.

    def whatamI():
        """What am I when it's all over?"""
        I = 'dire'
        I = 'fire'
        I = 'alone'
        I = 'stone'
        I = 'old'
        I = 'cold'
        print(I)

    whatamI()
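If you want to watch the sequencing happen step by step, one option is to print the variable after every assignment. The following is only a sketch of that idea: the function name what_am_i_traced and the extra print statements are my additions for illustration, not part of the poem.

```python
def what_am_i_traced():
    """What am I when it's all over? (version that shows every step)"""
    I = 'dire'
    print('I am now', I)
    I = 'fire'
    print('I am now', I)   # the old value 'dire' has been overwritten
    I = 'alone'
    print('I am now', I)
    I = 'stone'
    print('I am now', I)
    I = 'old'
    print('I am now', I)
    I = 'cold'
    print('I am now', I)
    return I               # only the last value assigned survives


final = what_am_i_traced()
```

Each assignment replaces whatever the variable held before, so the order of the lines matters: swap two lines of the poem and you may get a different answer.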

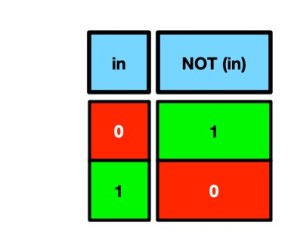

Selection

Here is a pseudocode poem based on selection, which is the second core control structure. It chooses between two options based on a boolean test: a true / false question. The question here is: do I love you? Dry run the algorithm or run the program to find out.

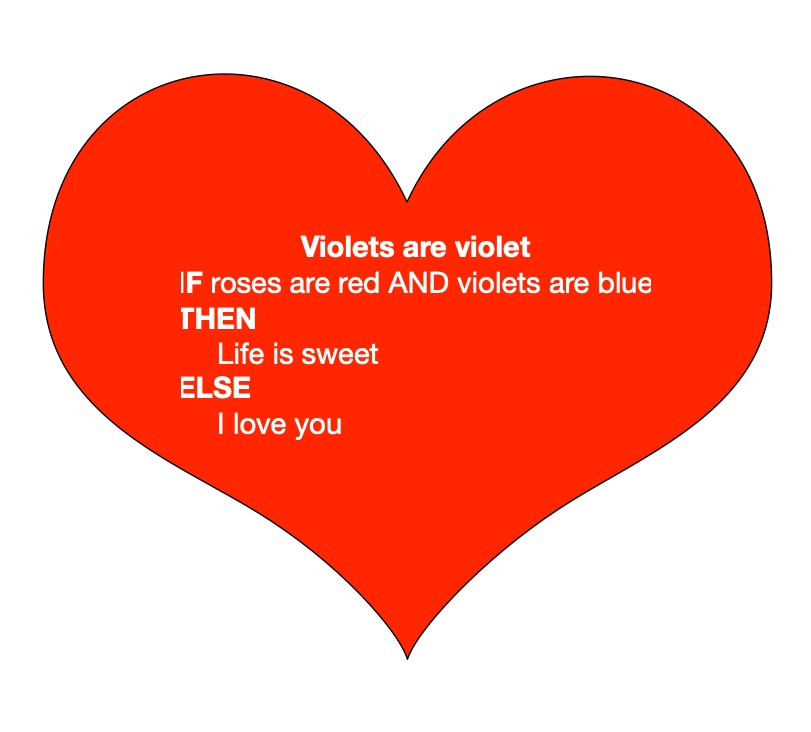

Violets are violet

if roses are red and violets are blue

then

Life is sweet

else

I love you

Here it is implemented as a Python program (with appropriate initialisation).

    def violetsareviolet():
        """Violets are violet"""
        roses = 'red'
        violets = 'violet'
        if roses == 'red' and violets == 'blue':
            print('Life is sweet')
        else:
            print('I love you')

    violetsareviolet()
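To dry run the selection with different starting values, you could turn the colours into parameters. This is a minimal sketch under that assumption: the function name violets_poem and its parameters are made up here, and it returns the chosen line rather than printing it so that the result is easy to check.

```python
def violets_poem(roses, violets):
    """Return the line the selection poem chooses for the given colours."""
    # Both halves of the test must be true for the 'then' branch to run.
    if roses == 'red' and violets == 'blue':
        return 'Life is sweet'
    else:
        return 'I love you'


# Try the test with two different beliefs about violets.
for violets in ['violet', 'blue']:
    print('violets are', violets, '->', violets_poem('red', violets))
```

Because the test uses and, changing either colour away from the expected value is enough to send the program down the else branch.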

Find out more about selection-based pseudocode programs via our computational literary criticism of Rudyard Kipling’s poem “If”.

Iteration or Repetition

The final kind of control structure is iteration (i.e., repetition). It is used to repeat the same lines over and over again.

Is this poem really long?

it is true

while it is true

this is short

it is endless

Here it is implemented as a Python program.

    def isthispoemreallylong():
        """Is this poem really long?"""
        it = True
        while (it == True) :
            this = 'short'
        it = 'endless'

    isthispoemreallylong()

Can you work out what it does as an algorithm/program, rather than as just a normal poem? You may need a version with print statements to understand its beauty.

    def isthispoemreallylong2():
        """Is this poem really long?"""
        it = True
        while (it == True) :
            this = 'short'
            print("this is " + this)
        it = 'endless'
        print("it is " + it)

    isthispoemreallylong2()

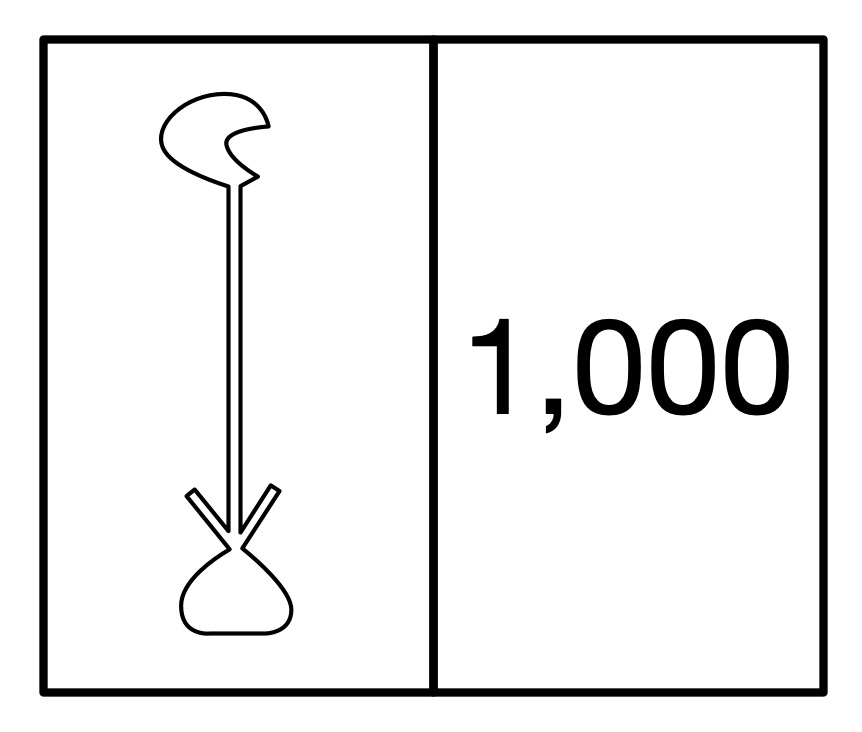

The word while indicates the start of a while loop. In the pseudocode, it is a command to repeat the following statement(s). It checks the boolean expression before executing the body each time, and stops only when that expression is false. In this case the body sets the variable named this to the string value ‘short’. The test, though, is about a different variable, the variable it, which is not changed inside the loop. Once in the loop, nothing will ever change the variable it, so the test will always give the same result. Variable it will always be True and the loop will keep setting variable this to the value ‘short’, over and over again. This means the loop is a non-terminating loop. It never exits, so the lines of code after it are never executed: as a program, they are never followed by the computer. The variable it is never set to the value ‘endless’.

Overall the poem is short in number of lines but it is actually endless if executed. It is the equivalent of a poem that once you start reading it you never get to the end:

it is true

check it is true

this is short

check it is true

this is short

check it is true

this is short

...
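The never-ending reading above can also be produced by a program, as long as we add a safety limit so that the demonstration itself stops. This is a sketch only: the function name, the trace list and the max_iterations parameter are my assumptions for illustration, and the poem has no such limit.

```python
def is_this_poem_really_long_bounded(max_iterations=3):
    """Record what the repetition poem does, giving up after a few loops."""
    trace = []
    it = True
    trace.append('it is true')
    iterations = 0
    while it == True:
        if iterations == max_iterations:
            # Artificial escape hatch: the real poem would carry on forever.
            trace.append('...')
            return trace
        trace.append('check it is true')   # the test happens before the body
        this = 'short'
        trace.append('this is ' + this)
        iterations += 1
    # Never reached, because nothing in the loop changes the variable it.
    it = 'endless'
    trace.append('it is ' + it)
    return trace


for line in is_this_poem_really_long_bounded():
    print(line)
```

However large you make max_iterations, the line 'it is endless' never appears in the trace, which is exactly what the explanation of the loop predicts.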

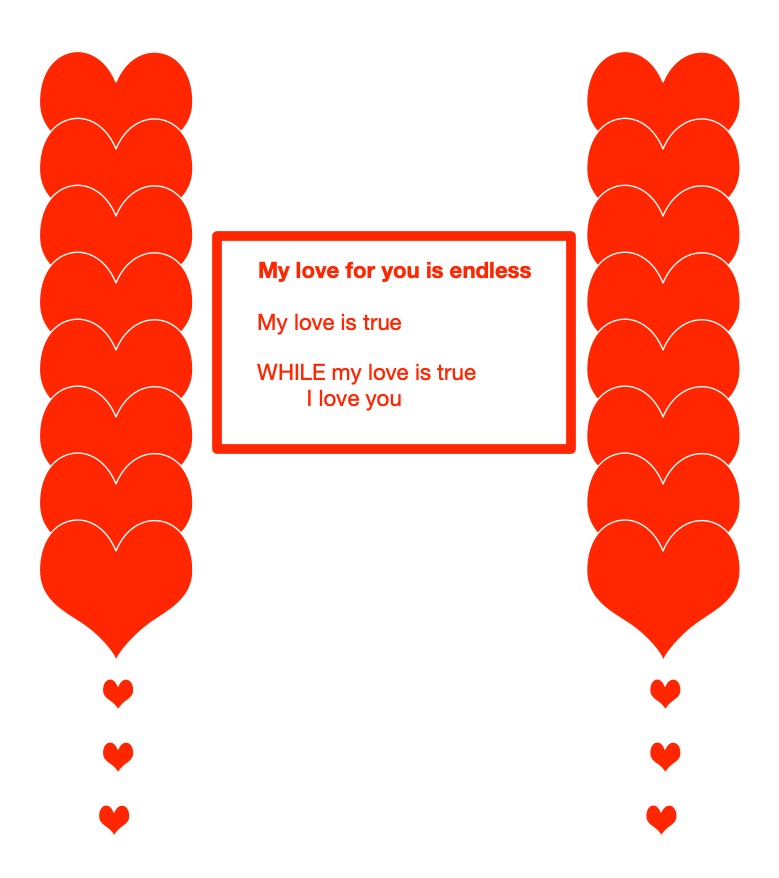

Here is a more romantically inclined poem for Valentine’s Day (which, at the time of writing, is coming up), again using repetition.

My love for you is endless

my love is true

while my love is true

I love you

Here it is implemented as a Python program.

    def myloveforyouisendless():
        """My love for you is endless"""
        my_love = True
        while my_love == True:
            print('I love you')

    myloveforyouisendless()

In the pseudocode of these repetition poems the verb “to be” is used for two different purposes: as an assignment statement (it is true, used as a statement, means it = True) and as a boolean expression (it is true, used as a test, means it == True). As an assignment it is a command to do something. As an expression it represents a value: it evaluates to either true or false. Confusing these two uses is a common mistake novices make when programming, and it shows one reason why programming languages use different symbols for different meanings rather than relying on natural language.
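The difference between the two uses of “is” can be seen directly in Python. The snippet below is a small sketch, with variable names such as answer and mixed_up_is_allowed invented for the purpose. It also uses Python's built-in compile function to show that the language rejects a plain assignment written where a test is expected.

```python
it = True                 # assignment: a command that gives it a value
answer = (it == True)     # boolean expression: a question with a True/False answer
print(answer)

it = 'endless'            # a new assignment changes the variable...
print(it == True)         # ...and so changes the answer to the question

# Writing the assignment symbol where a test belongs is not valid Python.
try:
    compile("if it = True:\n    print('yes')\n", "<poem>", "exec")
    mixed_up_is_allowed = True
except SyntaxError:
    mixed_up_is_allowed = False
print(mixed_up_is_allowed)
```

English lets one word do both jobs and leaves the reader to work out which is meant. Python makes you choose a symbol, so the program can only mean one thing.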

Now it is your turn. Can you write a great poem that can also be executed?

Paul Curzon, Queen Mary University of London

More on …

- Poetry and Computer Science

- Computational literary criticism of Rudyard Kipling’s poem “If”

- Lego Computer Science: Sequence, Selection and Iteration

- Computer Science and Poetry ideas for teaching [Teaching London Computing]


This blog is funded by EPSRC on research agreement EP/W033615/1.

Answers

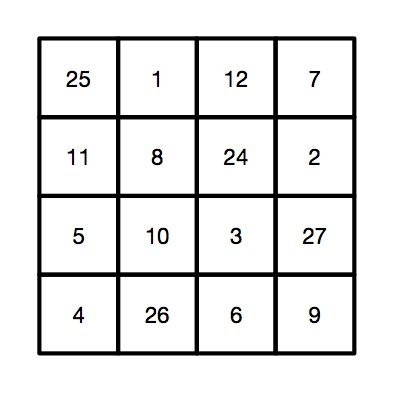

- The sequencing poem is about a volcano, but the answer to the question in the title is ‘cold’.

- Assuming you agree with me that violet flowers are violet (or at least not blue), then clearly we are compatible, at least in being pedantic, and you will find I love you.