Programming languages have lots of control structures, like if-statements and while loops, case-statements and for loops, method call and return, … but a famous research result in theoretical computer science (now called the structured program theorem) says you can do everything that it is possible to do with only three. All those alternatives are just for convenience. All you need is one way to do sequence, one way to do selection and one way to do iteration. If you have those you can build the structure needed to do any computation. In particular, you do not need a goto statement! We can explore what that means by just thinking about instructions to build Lego.

We have seen that you can think of a set of instructions to build Lego as an algorithm. So do those instructions have everything needed to describe the structure of any computation?

What do we mean by a control structure? Just some mechanism to decide the order in which instructions must be done for an algorithm to work. Part of what makes a set of instructions an algorithm is that the order of the instructions is precisely specified. Follow the instructions in the right order and the algorithm is guaranteed to work.

Goto

First of all, Lego instructions are designed to be really clear and easy to follow. The nice folk at Lego want anyone to be able to follow them, even a small child, and manage to accurately build exactly the model shown on the box.

What they do not make use of is a Goto instruction, an arbitrary jump to another place, where you are told, for example, to move to Page 14 for the next instruction. That kind of jump from place to place is reserved for Fighting Fantasy Adventure Books, where you choose the path through the story. They jump you about precisely because the aim is for you to be lost in the myriad of potential stories that are possible. You just don’t do that if you want instructions to be easy to follow.

The structured program theorem provided the ammunition for arguing that goto should not be used in programming and that structured programming (hence the theorem’s name) should be used instead. All the theorem actually does, though, is show that goto is not needed, not that using it is worse. Even so, the argument was eventually won, with some exceptions. For programs to be human-readable and maintainable it is best that they use forms of structured programming, and avoid the spaghetti programming structures that goto leads to.
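To get a feel for why goto is not needed, here is a rough Python sketch of a tiny gamebook followed without any jumps. One common way to do this is to keep the current page in a variable and loop, using selection to decide what that page says. The book itself, its page numbers (1, 7, 14) and the function name `follow_gamebook` are all made up for illustration.

```python
def follow_gamebook(choices):
    """Follow a tiny, made-up gamebook using only sequence, selection and iteration."""
    choices = list(choices)   # the reader's decisions, in the order they are made
    page = 1                  # the page we are on stands in for a goto target
    visited = []
    while page != 0:          # iteration: carry on until THE END (page 0, by our convention)
        visited.append(page)
        if page == 1:         # selection: what does the current page tell us to do?
            # "Turn to page 14 to go left, or page 7 to go right."
            page = 14 if choices.pop(0) == "left" else 7
        elif page == 7:
            page = 14         # "Now turn to page 14."
        elif page == 14:
            page = 0          # the story ends here
    return visited


print(follow_gamebook(["left"]))   # [1, 14]
print(follow_gamebook(["right"]))  # [1, 7, 14]
```

Every "turn to page N" has become an assignment to `page`, so the jumping about is still there in spirit, but the program text itself only uses a loop and an if-statement.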

Sequencing

The main kind of control structure in a booklet of Lego instructions is instead sequencing. Instructions follow one after the other. This is indicated by the pages of the booklet. On each page, though, the instructions are split into boxes that are numbered. The boxes and numbers are the essential part of the control structure. You build the Lego model in the order of the numbered boxes. The numbering provides a sequencing control structure. Programming languages usually just use the order of instructions down a page to indicate sequencing, sometimes separated by punctuation (like a semi-colon), though early languages used this kind of numbering. The point is the same however it is done: it is just a mechanism to make clear the order in which the instructions are followed one after another, i.e., sequencing.
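As a small illustration, numbered boxes can be sketched in Python as a dictionary from box number to instruction. The booklet contents and the name `follow_booklet` are invented for this sketch.

```python
def follow_booklet(numbered_boxes):
    """Return the instructions in the order a builder would follow them."""
    followed = []
    for number in sorted(numbered_boxes):      # lowest box number first
        followed.append(numbered_boxes[number])
    return followed


# The boxes are written out of order on purpose: it is the numbers,
# not where they sit on the page, that fix the order here.
booklet = {2: "add the walls", 1: "lay the base plate", 3: "add the roof"}
order = follow_booklet(booklet)
print(order)  # ['lay the base plate', 'add the walls', 'add the roof']
```

Python itself sequences in the other way described above: the three statements after the function are simply run in the order they appear down the page.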

Parallelism and time-slicing

However, with Lego there is another control structure within those boxes that is not quite sequencing. Each box normally has multiple pieces to place, with the position of each shown. The Lego algorithm isn’t specifying the order in which those pieces are placed (any order will do). This is a kind of non-deterministic sequencing control structure. It is similar to a parallelism control structure in programming languages: if you like building your Lego model together with others, then a different person could pick up each piece and all place their pieces at the same time (parallelism). Alternatively, they could place the pieces one after the other in some random order (time-slicing) and always end up with the same final result once the box is completed.

Is this necessary though? The structured program theorem says not, and in this case it is relatively easy to see that it isn’t. The people writing the instruction booklet could have decided an order themselves and enforced it. Which order they chose wouldn’t matter. Any Lego instruction booklet could be converted to one using only sequencing without parallelism or time-slicing.
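The claim that the order within a box does not matter can be checked with a short Python sketch. It assumes a very simplified model where a build is just a set of (piece, position) pairs; the pieces and positions are made up.

```python
from itertools import permutations


def place_pieces(pieces_in_some_order):
    """Place each piece in turn and return the finished model."""
    model = set()
    for piece, position in pieces_in_some_order:
        model.add((piece, position))
    return frozenset(model)


# One box of instructions: three pieces, each with a fixed position.
box = [("red 2x4", (0, 0)), ("blue 2x2", (4, 0)), ("yellow 1x2", (6, 0))]

# Try every possible order of placing the pieces and collect the results.
results = {place_pieces(order) for order in permutations(box)}
all_orders_agree = len(results) == 1
print(all_orders_agree)  # True
```

Because every order gives the same model, the booklet writers could pick any one of the six orders and write it down as plain sequencing.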

Iteration

Iteration is just a way to repeat instructions or sub-programs. Lego instructions have a simplified form of repetition which is the equivalent of a simple for loop in programming. It just says that a particular box of instructions should be followed a fixed number of times (like 3 x meaning make this Lego sub-build three times). With only this way of doing iteration, Lego instructions are not a totally general form of computation. There are algorithms that can’t be specified in Lego instructions. To be good enough to play its full role in the theorem, the iteration control structure has to have the capability to be unbounded. The decision to continue or not is made at the end of each iteration. You follow the instructions once, then decide if they should be followed again (and keep doing that). Having such a control structure would mean that at the point when you started to build the Lego construct to be repeated, you would not necessarily know how many times that Lego construct was to be built. It’s possible to imagine Lego builds like this. For example, you might be building a fairytale castle made of modular turreted towers, where you can keep deciding whether to build another tower after each new tower is completed, until the castle is big enough. That would be an unbounded loop. An issue with unbounded loops is that they might never terminate…you could be damned to build Lego towers for eternity!
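The difference between the two kinds of iteration can be sketched in Python. The function names and the `is_big_enough` test are hypothetical, chosen just to match the castle example.

```python
def build_sub_build(times):
    """Bounded iteration: like '3 x' in a booklet, the count is known up front."""
    towers = []
    for _ in range(times):
        towers.append("tower")
    return towers


def build_castle(is_big_enough):
    """Unbounded iteration: decide after each tower whether to build another."""
    towers = []
    keep_building = True
    while keep_building:
        towers.append("tower")                      # follow the instructions once...
        keep_building = not is_big_enough(towers)   # ...then decide whether to repeat
    return towers


print(len(build_sub_build(3)))                     # 3
print(len(build_castle(lambda t: len(t) >= 5)))    # 5
```

If `is_big_enough` never answers yes, `build_castle` never returns, which is exactly the eternal tower building described above.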

Selection

The final kind of control structure needed is selection. Selection involves having a choice of what instruction or subprogram to do next. This allows an algorithm to do different things depending on data input or the results of computation. As most Lego sets involve building one specific thing, there isn’t much use of selection in Lego booklets.

However, some Lego sets do have a simple form of selection. There are “3 in 1” sets where you can, for example, choose to make one of three animals by choosing one of three instruction booklets to follow at the start.

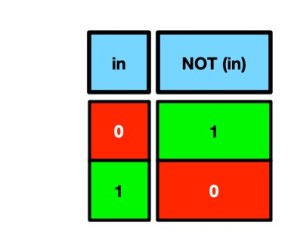

To be fully computationally general there would need to be choice possible at any point, in the way repetition can appear at any point in the booklet. It would need to be possible for any instruction or block of instructions to be preceded by a test of whether they should be followed or not, with that test being an arbitrary true/false question.

Again, such a thing is conceivable if more complex Lego builds were wanted. Building a fairytale castle you might include options to choose to build different kinds of turret on top of the towers, or choose different colours of bricks to make rainbow towers, or… If this kind of totally general choice was provided then no other kind of selection control structure would be needed. Having such instructions would provide a level of creativity between that of fixed sets to build one thing and the original idea of Lego as just blocks you could build anything from (the sets would need more bricks though!).
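Here is a rough Python sketch of what such general selection might look like for the castle. The function `build_tower`, its parameters and the colour list are assumptions made for illustration. The point is that a true/false test can guard any step, not just the choice of booklet at the start.

```python
def build_tower(height, pointed_turret, rainbow):
    """Build one tower, with choices made in the middle of the build."""
    colours = ["red", "orange", "yellow", "green", "blue", "purple"]
    tower = []
    for level in range(height):
        if rainbow:                                   # a test inside the repeated step
            tower.append(colours[level % len(colours)] + " brick")
        else:
            tower.append("grey brick")
    if pointed_turret:                                # a test before the final step
        tower.append("pointed turret")
    else:
        tower.append("flat turret")
    return tower


print(build_tower(2, pointed_turret=True, rainbow=False))
# ['grey brick', 'grey brick', 'pointed turret']
```

With an if-statement like this available everywhere, a “3 in 1” style choice is just one special case: a test made once, right at the start.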

Sequence, Selection and Iteration are enough (but only if powerful enough)

So Lego instruction booklets do include the three kinds of control structure needed: sequence, selection and iteration. However, the versions used are not actually general enough for the structured program theorem to apply. Lego instructions with the control structures discussed are not powerful enough to be computationally complete, and so cannot describe all possible algorithms. More general forms are needed than found in normal Lego instructions to do that. In particular, a more general version of iteration is needed, as well as a version of selection that can be used anywhere, and that includes a general purpose test. All programming languages have some powerful version of all three control structures. If they did not they could not be used as general purpose languages. There would be algorithms that could not be implemented in the language.

Lego instructions also use an extra kind of control structure that is not actually needed. It is there just for convenience, just as programming languages have lots of extra control structures just for convenience too.

Sadly then, Lego instructions, as found in the normal instruction booklets, are not as general as a programming language. They do still provide a similar amount of fun though. Now, I must get back to building Notre Dame.

Paul Curzon, Queen Mary University of London

More on …

- Lego Computer Science

- Part of a series featuring pixel puzzles, compression algorithms, number representation, gray code, binary and computation.