Mary Edwards was a computer, a human computer. Even more surprisingly for the time (the 1700s), she was a female computer (and so was her daughter Eliza).

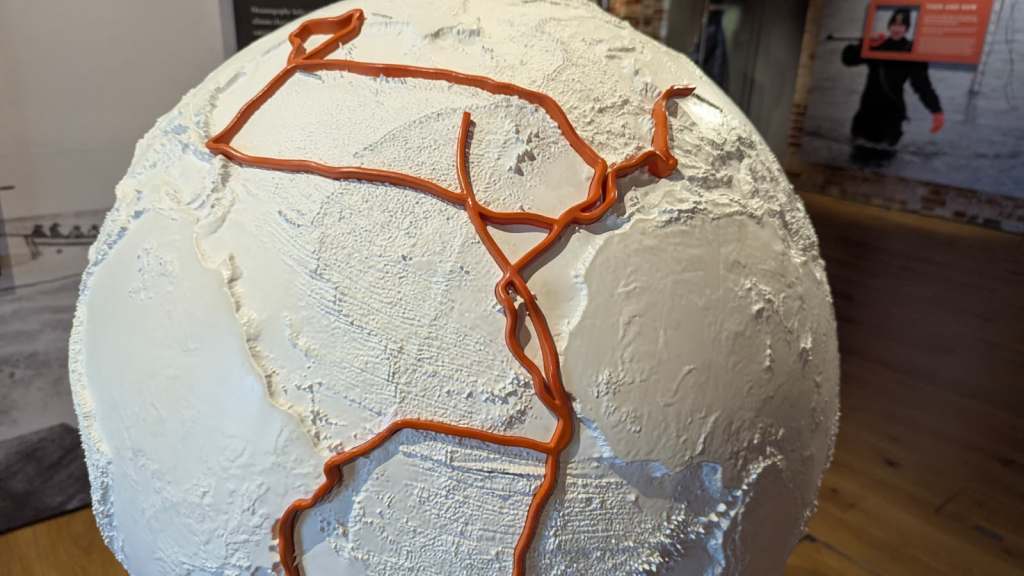

In the early 1700s, navigation at sea was a big problem. In particular, if you were lost in the middle of the Atlantic Ocean, there was no good way to determine your longitude, your position east to west. There were, of course, no satnavs at the time, not least because there would be no satellites for well over 200 years!

Longitude could be worked out by taking sightings of the positions of the sun, moon or planets at different times of day, but only if you knew the time accurately. Unfortunately, there was no good way to know the precise time at sea. Then, in the mid 1700s, clockmaker John Harrison invented a clock that could survive a rough sea voyage and still keep highly accurate time. Now the problem moved on to helping mariners know where the moon and planets were supposed to be at any given time, so that they could use the method.
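Why does knowing the time matter so much? The Earth turns 360 degrees in 24 hours, so every hour of difference between your local time (found, say, from when the sun is highest in the sky) and the time at a reference place such as Greenwich corresponds to 15 degrees of longitude. The Python sketch below shows just that arithmetic. The function name `longitude_from_time` is our own illustration, and the sketch ignores the many practical corrections a real navigator had to make.

```python
def longitude_from_time(local_hours: float, reference_hours: float) -> float:
    """Return longitude in degrees: east of the reference meridian is positive,
    west is negative. (Hypothetical helper, for illustration only.)

    The Earth turns 360 degrees in 24 hours, i.e. 15 degrees per hour.
    If your local time is behind the reference time, you are to the west.
    """
    difference = local_hours - reference_hours
    # Wrap the difference into the range -12..+12 hours so we
    # measure the shorter way round the globe.
    difference = (difference + 12) % 24 - 12
    return difference * 15.0
```

For example, if it is local noon where you are but your clock, set to Greenwich time, says 3pm, you are three hours behind Greenwich: `longitude_from_time(12.0, 15.0)` gives `-45.0`, that is, 45 degrees west.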

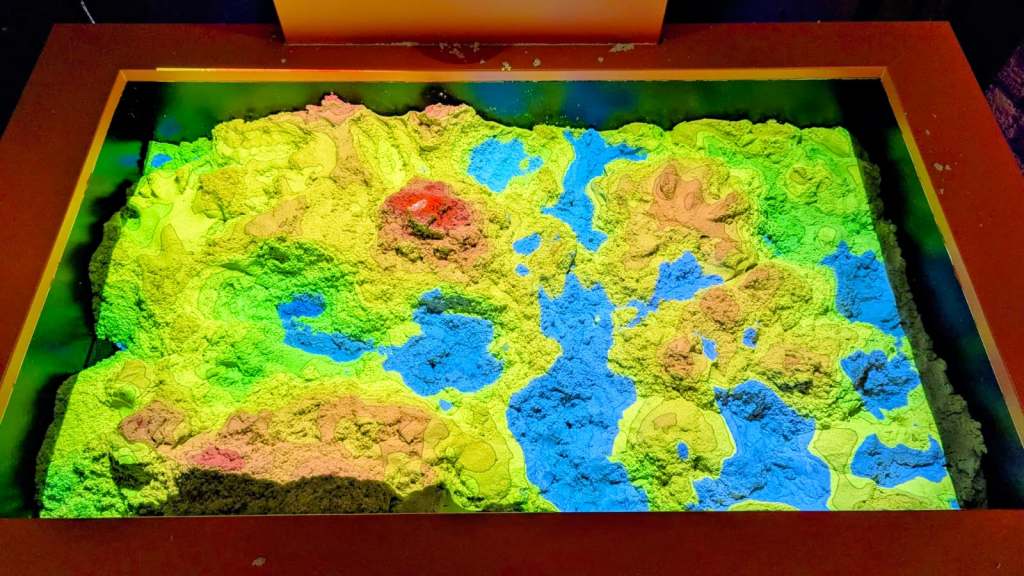

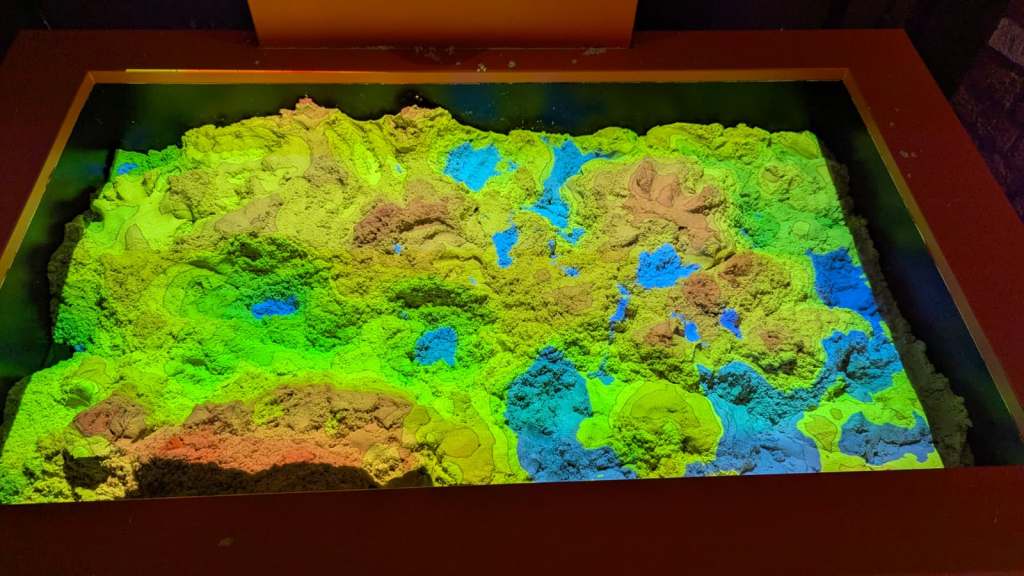

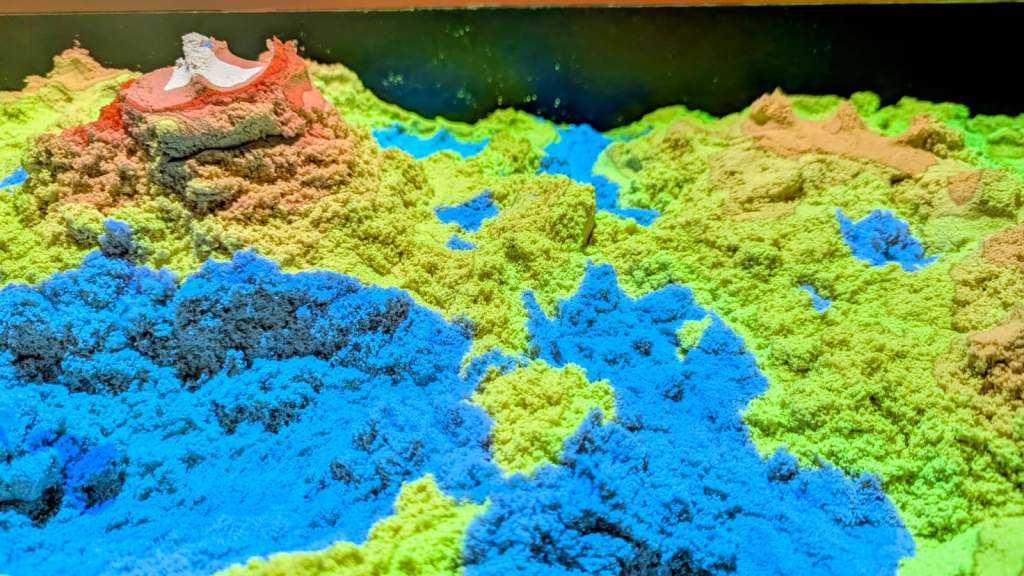

As a result, the Board of Longitude (set up by the UK government to solve the problem), together with the Royal Greenwich Observatory, started to publish the Nautical Almanac from 1767. It contained lots of astronomical data of this kind for use by navigators at sea. For example, it contained tables of the position of the moon, or more specifically its angle in the sky relative to the sun and planets (known as lunar distances). But how were these angles known years in advance to create the annual almanacs? Well, basic Newtonian physics allows the positions of the planets and the moon to be calculated from how everything in the solar system moves together, given their positions at a known time. From that, their position in the sky at any time can be calculated. Those answers went into the Nautical Almanac. Each year a new set of tables was needed, so the answers also had to be constantly recomputed.
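A lunar distance is simply the angle in the sky between the moon and another body such as the sun. The really hard part for the human computers was predicting where each body would be; once those positions are known, the angle between them follows from spherical trigonometry (the spherical law of cosines). The Python sketch below shows only that last step, assuming each position is given as right ascension and declination in degrees. The function name is hypothetical, and the sketch ignores corrections such as refraction and parallax.

```python
import math


def angular_separation(ra1_deg: float, dec1_deg: float,
                       ra2_deg: float, dec2_deg: float) -> float:
    """Angle in degrees between two sky positions, each given as
    (right ascension, declination) in degrees. Hypothetical helper."""
    ra1, dec1, ra2, dec2 = map(math.radians,
                               (ra1_deg, dec1_deg, ra2_deg, dec2_deg))
    # Spherical law of cosines for the angle between two points on a sphere.
    cos_d = (math.sin(dec1) * math.sin(dec2)
             + math.cos(dec1) * math.cos(dec2) * math.cos(ra1 - ra2))
    # Clamp to [-1, 1] so rounding errors cannot break acos.
    cos_d = max(-1.0, min(1.0, cos_d))
    return math.degrees(math.acos(cos_d))
```

For instance, two points on the celestial equator a quarter of the way round from each other, `angular_separation(0, 0, 90, 0)`, come out (up to rounding) as 90 degrees apart.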

But who did the complex calculations? No calculators, computers or other machines that could do the work automatically existed yet. It had to be done by human mathematicians. Computers then were just people, following algorithms precisely and accurately to get jobs like this done. The Astronomer Royal, Nevil Maskelyne, recruited 35 male mathematicians to do the job. One was the Revd John Edwards (well-educated clergy were, of course, perfectly capable of doing maths in their spare time!). He was paid for calculations done at home from 1773 until he died in 1784.

However, when he died, Maskelyne received a letter from his widow, Mary, revealing officially that she had in fact been doing a lot of the calculations herself. With no family income any more, she asked if she could continue the work to support herself and her daughters. The work had been of high enough quality for John Edwards to be kept on year after year, so Mary was clearly an asset to the project. Maskelyne had also visited the family several times, so knew them, and was possibly even unofficially aware of who was actually doing the work towards the end. He was open-minded enough to give her a full-time job. She worked as a human computer until her death 30 years later. Women doing such work was not at all normal at the time, and this became apparent when Maskelyne himself died and the work started to dry up. The quality of the work she did do, though, eventually persuaded the new Astronomer Royal to continue to give her work.

Just as she had helped her husband, her daughter Eliza helped her do the calculations, becoming proficient enough herself that when Mary died, Eliza took over the job, continuing the family business for another 17 years. Unfortunately, however, in 1832 the work was moved to a new body called ‘His Majesty’s Nautical Almanac Office’. At that point, despite Mary and Eliza having proved for half a century or more that they were at least as good as the men, government-imposed civil service rules came into force that meant women could no longer be employed to do the work.

Mary and Eliza, however, had done lots of good, helping mariners navigate the oceans safely for very many years through their work as computers.



This blog is funded by EPSRC on research agreement EP/W033615/1.