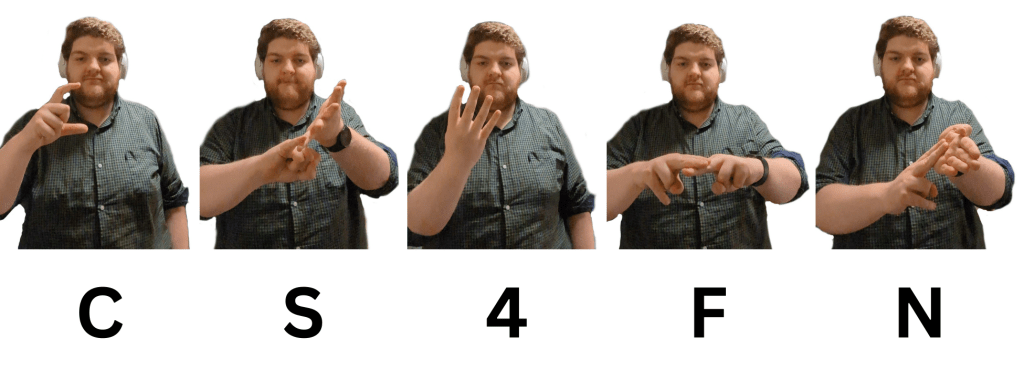

Can a machine learn from its mistakes, until it plays a game perfectly, just by following rules? Donald Michie worked out a way in the 1960s. He made a machine out of matchboxes and beads called MENACE that did just that. Our version plays the game Ladder and is made of cups and sweets. Punish the machine when it loses by eating its sweets!

Let’s play the game Ladder. It is played on a board that looks like a ladder, with a single piece (an X) starting on the bottom rung. Players take turns to move the piece 1, 2 or 3 rungs up the ladder. You win if you are the one who moves the piece onto the top rung, the target. We will play on a ladder with 10 rungs, as shown below (but you can play on larger ladders).

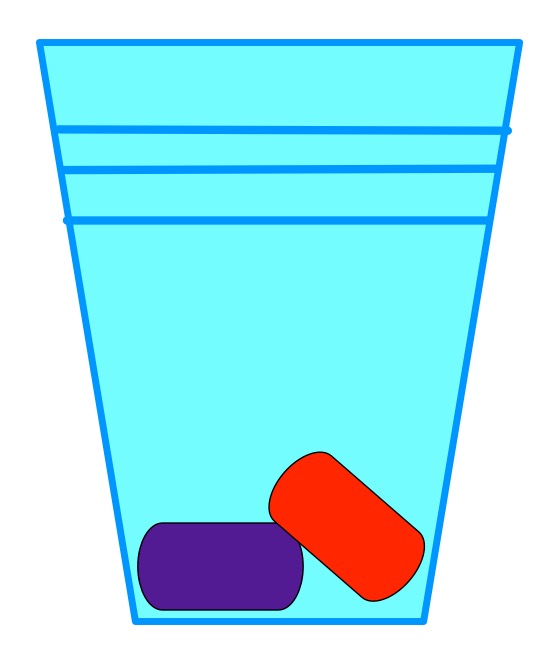

To make the learning machine, you need 9 plastic cups (one for each rung below the top) and lots of wrapped sweets coloured red, green and purple. Spread out the sheets showing the possible board positions (see below) and place a cup on each. Put coloured sweets in each cup to match the arrows: most positions have a red, a green and a purple arrow, so put one red, one green and one purple sweet in each of those cups. Once every cup has sweets matching its arrows, your machine is ready to play (and learn).
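If you would like to see the same setup in a program, here is a minimal sketch in Python. It makes some assumptions that are not in the article: sweets are stored as the move sizes 1, 2 and 3 rather than as colours (which colour means which move is up to you), and a move that would overshoot the top rung is not allowed. The names `TOP`, `MOVES` and `make_cups` are made up for this example.

```python
TOP = 10           # the rung you must reach to win
MOVES = (1, 2, 3)  # a move goes 1, 2 or 3 rungs up

def make_cups(top=TOP):
    """Build one cup per rung below the top.

    Each cup is a list of sweets, and each sweet is a move size.
    Assumption: moves that would overshoot the top are left out.
    """
    return {pos: [m for m in MOVES if pos + m <= top] for pos in range(1, top)}

cups = make_cups()
for pos, sweets in cups.items():
    print(f"cup for rung {pos}: sweets {sweets}")
```

With 10 rungs this gives 9 cups. The cups near the top hold fewer sweets because fewer moves fit.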

The machine plays first. Each cup sits on a possible board position that your machine could end up in. When it is the machine’s turn, find the cup that matches the current board position. Shut your eyes and take a sweet at random from that cup, placing it next to the cup. Make the move indicated by the arrow of that colour. Then the machine’s human opponent makes a move. Once they have moved, the machine plays in the same way again, finding the position and taking a sweet to decide its move. Keep playing alternately like this until someone wins. If the machine ends up in a position where the cup has no sweets left, it resigns.

If the machine loses, then eat the sweet corresponding to the last move it made. It will never make that mistake again! Win or lose, put all the other sweets back.

Now, play lots of games like that, punishing the machine by eating the sweet of its last move each time it loses. The machine will play badly at first. It’s just making moves at random. The more it loses, the more sweets (losing moves) you eat, so the better it gets. Eventually, it will play perfectly. No one told it how to win – it learnt from its mistakes because you ate its sweets! Gradually the sweets left encode rules of how to win.
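The playing and punishing steps above could be sketched in Python like this. It is only one possible version and makes several assumptions: sweets are stored as move sizes instead of colours, overshooting the top is not allowed, the opponent picks moves at random (a stand-in for a human), and when the machine resigns we eat the sweet for the last move it did make. The names `make_cups`, `random_opponent` and `play_game` are invented for this example.

```python
import random

TOP = 10  # the rung you must reach to win

def make_cups(top=TOP):
    """One cup (a list of move sizes) for each rung below the top."""
    return {pos: [m for m in (1, 2, 3) if pos + m <= top] for pos in range(1, top)}

def random_opponent(pos, rng, top=TOP):
    """Hypothetical stand-in for the human: picks any legal move at random."""
    return rng.choice([m for m in (1, 2, 3) if pos + m <= top])

def play_game(cups, opponent, rng, top=TOP):
    """Play one game with the machine moving first. Returns True if it wins."""
    pos = 1      # the piece starts on the bottom rung
    last = None  # (cup, sweet) for the machine's most recent move
    while True:
        if not cups[pos]:
            won = False  # empty cup: the machine resigns
            break
        move = rng.choice(cups[pos])  # take a sweet with eyes shut
        last = (pos, move)
        pos += move
        if pos == top:
            won = True
            break
        pos += opponent(pos, rng, top)
        if pos == top:
            won = False
            break
    if not won and last is not None:
        cup, sweet = last
        cups[cup].remove(sweet)  # eat the sweet for the losing move
    return won

rng = random.Random(0)
cups = make_cups()
results = [play_game(cups, random_opponent, rng) for _ in range(2000)]
print("wins in first 100 games:", sum(results[:100]))
print("wins in last 100 games:", sum(results[-100:]))
print(cups)
```

The sweets that were taken out during a game are never removed from the lists, which matches putting them all back afterwards. Only the eaten sweet disappears for good.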

Try slightly different rules. At the moment we just punish bad moves. When the machine wins, you could also reward all the moves that led to the win by adding another sweet of the same colour to each of those cups. Now the machine will be more likely to make those moves again. What other variations of rewards and punishments could you try?
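Here is one way the reward idea might look in Python, as a rough sketch. It assumes the program keeps a list of every move the machine made during the game (called `history` here, a made-up name) and that sweets are stored as move sizes rather than colours. The function `update_cups` is also invented for this example.

```python
def update_cups(cups, history, won):
    """Reward or punish the machine after one game.

    history is the list of (rung, move) pairs the machine made, in order.
    """
    if won:
        # Reward: add one extra sweet for every move that led to the win.
        for pos, move in history:
            cups[pos].append(move)
    elif history:
        # Punish: eat the sweet for the last move only.
        pos, move = history[-1]
        cups[pos].remove(move)

# A tiny example with just the cups for rungs 1, 3, 4 and 7.
cups = {1: [1, 2, 3], 3: [1, 2, 3], 4: [1, 2, 3], 7: [1, 2, 3]}
update_cups(cups, [(1, 1), (3, 3), (7, 3)], won=True)   # a winning game
update_cups(cups, [(1, 2), (4, 3)], won=False)          # a losing game
print(cups)
```

Because a cup can now hold several sweets for the same move, picking a sweet at random makes rewarded moves more likely, just as with real sweets.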

Why not write a program that learns in the same way, but using data values in arrays to represent moves instead of sweets? Not so yummy!

– Paul Curzon, Queen Mary University of London


This blog is funded by EPSRC on research agreement EP/W033615/1.